How to Combine Models with Stacking

MartinLiebig

Administrator, Moderator, Employee, RapidMiner Certified Analyst, RapidMiner Certified Expert, University Professor (Posts: 3,362)

At some point in your analysis, you will want to improve your model's performance. The first step is usually to return to the feature generation phase and search for better attribute combinations for your learners.

As a next step, you might want to improve the performance of the machine learning method itself. A common approach for this is ensemble learning. In ensemble learning, you build several different (base) learners and combine or chain their results in different ways. In this article, we focus on a technique called Stacking. Other approaches are Voting, Bagging and Boosting; these methods are also available in RapidMiner.

In Stacking, you have at least two algorithms called base learners. Each base learner is trained on the data set just as it would be if you used it on its own. You then apply the base learners to the data set, which results in a data set containing your usual attributes plus the predictions of your base learners.

Afterwards, you train another algorithm (often called the meta learner) on this enriched data set. It uses both the original attributes and the predictions from the previous learning step.

In essence, you use two algorithms to build an enriched data set so that a third algorithm can deliver better results.
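The three steps can be sketched in plain Python. This is a minimal sketch, not RapidMiner's implementation: a trained model is assumed to be any function mapping an attribute list to a class label, and `enrich`, `stack` and the toy trainers are hypothetical names chosen for illustration.

```python
from typing import Callable, List, Sequence

# Assumption: a trained model is any function from an attribute list to a class label.
Model = Callable[[Sequence[float]], int]
Trainer = Callable[[List[List[float]], List[int]], Model]


def enrich(rows: List[List[float]], base_models: List[Model]) -> List[List[float]]:
    """Keep the original attributes and append one prediction column per base learner."""
    return [list(row) + [float(model(row)) for model in base_models] for row in rows]


def stack(rows: List[List[float]], labels: List[int],
          base_trainers: List[Trainer], meta_trainer: Trainer) -> Model:
    # Step 1: train every base learner on the data set, exactly as if used alone.
    base_models = [train(rows, labels) for train in base_trainers]
    # Step 2: build the enriched data set (original attributes + base predictions).
    enriched = enrich(rows, base_models)
    # Step 3: train the meta learner on the enriched data set.
    meta_model = meta_trainer(enriched, labels)

    def predict(row: Sequence[float]) -> int:
        # New rows are enriched the same way before the meta model sees them.
        return meta_model(enrich([list(row)], base_models)[0])

    return predict


# Toy trainers (hypothetical): each base learner only looks at the sign of one attribute.
def sign_of_att1(rows, labels):
    return lambda row: int(row[0] > 0)


def sign_of_att2(rows, labels):
    return lambda row: int(row[1] > 0)


# Toy meta learner (hypothetical): XOR of the two appended prediction columns.
def xor_of_predictions(enriched_rows, labels):
    return lambda row: int(row[2]) ^ int(row[3])


model = stack([[1.0, -1.0]], [1], [sign_of_att1, sign_of_att2], xor_of_predictions)
print(model([0.5, -0.5]))  # 1
print(model([0.5, 0.5]))   # 0
```

Neither toy base learner can represent the XOR of the two signs on its own, but the meta learner recovers it from the appended prediction columns.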

Problem and Learners

To illustrate this, we look at a problem called Checkerboard. The data has two attributes, att1 and att2, and is structured in square patches, with each patch belonging to one class.
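The article does not state the board's size or value range, so the following generator is a hypothetical sketch: it assumes a 3 x 3 board centred on the origin, with att1 and att2 drawn uniformly from [-1.5, 1.5]. On an odd board like this, the four corner patches and the central patch share one class.

```python
import random
from typing import List, Tuple


def cell_label(att1: float, att2: float, cells: int = 3, half_width: float = 1.5) -> int:
    """Class of a point: patches alternate like the squares of a chess board."""
    width = 2 * half_width / cells
    i = min(int((att1 + half_width) / width), cells - 1)  # column index
    j = min(int((att2 + half_width) / width), cells - 1)  # row index
    return (i + j) % 2


def checkerboard(n: int, cells: int = 3, half_width: float = 1.5,
                 seed: int = 0) -> Tuple[List[List[float]], List[int]]:
    """Sample n points uniformly from the square and label them by patch."""
    rng = random.Random(seed)
    rows, labels = [], []
    for _ in range(n):
        att1 = rng.uniform(-half_width, half_width)
        att2 = rng.uniform(-half_width, half_width)
        rows.append([att1, att2])
        labels.append(cell_label(att1, att2, cells, half_width))
    return rows, labels


rows, labels = checkerboard(1000)
print(cell_label(0.0, 0.0), cell_label(-1.4, 1.4), cell_label(1.0, 0.0))  # 0 0 1
```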

Let’s try to solve this problem with a few learners and see what they can achieve. To see what each algorithm has learned, we apply its model to random data. Afterwards, we create a scatter plot with the prediction on the colour axis to investigate the decision boundaries. The results for each algorithm are depicted below.
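As a text-only stand-in for the scatter plot, the sketch below applies a model to uniformly random points (the pairs you would plot) and, separately, prints the model's predictions on a regular grid so that decision boundaries become visible. The function names, the [-1.5, 1.5] range and the `#`/`.` rendering are assumptions made for illustration.

```python
import random
from typing import Callable, List, Sequence, Tuple

Model = Callable[[Sequence[float]], int]


def probe(model: Model, n: int = 2000, half_width: float = 1.5,
          seed: int = 1) -> List[Tuple[List[float], int]]:
    """Apply a model to uniformly random points; the pairs are what you would scatter-plot."""
    rng = random.Random(seed)
    points = [[rng.uniform(-half_width, half_width), rng.uniform(-half_width, half_width)]
              for _ in range(n)]
    return [(p, model(p)) for p in points]


def ascii_map(model: Model, size: int = 12, half_width: float = 1.5) -> str:
    """Evaluate the model at grid-cell centres: '#' for class 1, '.' for class 0."""
    step = 2 * half_width / size
    lines = []
    for r in range(size):
        att2 = half_width - (r + 0.5) * step  # top line = largest att2
        line = "".join(
            "#" if model([-half_width + (c + 0.5) * step, att2]) == 1 else "."
            for c in range(size)
        )
        lines.append(line)
    return "\n".join(lines)


def right_half(p: Sequence[float]) -> int:
    """Example model: predicts class 1 on the right half of the plane."""
    return int(p[0] > 0)


print(ascii_map(right_half, size=4))  # four lines of "..##"
```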

Naïve Bayes: By design, Naïve Bayes (using normal distributions for the numerical attributes) can only model an n-dimensional ellipsoid. Because we are working in two dimensions, Naïve Bayes tries to find the most discriminating ellipse. As seen below, it places one on the origin. This is the only pattern it can recognize.
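The ellipse follows from the normal distributions. The sketch below assumes the Gaussian variant (a class prior plus one mean and variance per class and attribute, attributes independent given the class). Each attribute then contributes a term of the form -(x - mean)²/(2·var), so the boundary between two classes is a quadratic curve; when one class has smaller variances in both attributes, as in the toy data, that curve is an axis-aligned ellipse around it. `train_gaussian_nb` is a hypothetical name, not a RapidMiner API.

```python
import math
from collections import defaultdict
from typing import Callable, List, Sequence


def train_gaussian_nb(rows: List[List[float]],
                      labels: List[int]) -> Callable[[Sequence[float]], int]:
    """Fit a class prior plus one normal distribution per (class, attribute)."""
    by_class = defaultdict(list)
    for row, label in zip(rows, labels):
        by_class[label].append(row)

    params = {}
    for label, members in by_class.items():
        stats = []
        for k in range(len(members[0])):
            values = [m[k] for m in members]
            mean = sum(values) / len(values)
            # Small floor keeps the variance positive if all values are equal.
            var = sum((v - mean) ** 2 for v in values) / len(values) + 1e-9
            stats.append((mean, var))
        params[label] = (math.log(len(members) / len(rows)), stats)

    def log_score(label: int, row: Sequence[float]) -> float:
        log_prior, stats = params[label]
        # "Naive" independence: the log-likelihood is a sum of one term per
        # attribute, each quadratic in x, so class boundaries are quadratic curves.
        return log_prior + sum(
            -0.5 * math.log(2 * math.pi * var) - (x - mean) ** 2 / (2 * var)
            for x, (mean, var) in zip(row, stats)
        )

    def predict(row: Sequence[float]) -> int:
        return max(params, key=lambda label: log_score(label, row))

    return predict


# Toy data: class 1 clustered at the origin, class 0 spread out around it.
rows = [[0.0, 0.0], [0.1, -0.1], [-0.1, 0.1], [0.1, 0.1],
        [2.0, 2.0], [-2.0, 2.0], [2.0, -2.0], [-2.0, -2.0]]
labels = [1, 1, 1, 1, 0, 0, 0, 0]
nb = train_gaussian_nb(rows, labels)
print(nb([0.0, 0.05]), nb([1.5, -1.5]))  # 1 0
```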

k-NN: We also try k-NN with cosine similarity as the distance measure (Euclidean distance would solve the problem well on its own). Cosine similarity only depends on the angle between two points as seen from the origin, not on their distance from it, so k-NN can only find angular regions as a pattern. As a result, k-NN finds a star-like pattern and recognizes that the corners are blue, but it fails to recognize the central region as a blue area.
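The following hypothetical sketch (plain k-NN with a majority vote; names are illustrative) shows the consequence of using cosine similarity: all points on the same ray from the origin receive the same prediction, so the model cannot separate the central patch from an outer patch lying on the same ray.

```python
import math
from collections import Counter
from typing import Callable, List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0  # similarity to the zero vector defined as 0


def train_knn_cosine(rows: List[List[float]], labels: List[int],
                     k: int = 5) -> Callable[[Sequence[float]], int]:
    data = list(zip(rows, labels))

    def predict(row: Sequence[float]) -> int:
        # The k training points with the highest cosine similarity (smallest angle).
        neighbours = sorted(data, key=lambda d: cosine_similarity(row, d[0]),
                            reverse=True)[:k]
        # Majority vote among the neighbours.
        return Counter(label for _, label in neighbours).most_common(1)[0][0]

    return predict


# Four training points on the axes; k = 1 keeps the example easy to follow.
knn = train_knn_cosine([[1, 0], [0, 1], [-1, 0], [0, -1]], [0, 1, 0, 1], k=1)
# A point near the origin and a far-away point on the same ray get the same class.
print(knn([0.05, 0.01]), knn([5.0, 1.0]))  # 0 0
```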

Decision Tree: A Decision Tree model fails to discriminate. The reason is that the decision tree looks at each dimension separately, and in each dimension the data is uniformly distributed. The Decision Tree therefore finds no cut that separates the classes.
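To see why a single cut barely helps, the sketch below scores every threshold on one attribute with the Gini impurity. Using Gini is an assumption; the tree's actual split criterion may differ. The board is also assumed: a hypothetical 3 x 3 checkerboard on [-1.5, 1.5]², sampled on a regular 30 x 30 grid so the numbers are exact. On this board, the best cut (at att1 = ±0.5) lowers the impurity only from 40/81 ≈ 0.494 to 39/81 ≈ 0.481.

```python
from typing import List, Optional, Tuple


def cell_label(att1: float, att2: float, cells: int = 3, half_width: float = 1.5) -> int:
    """Hypothetical checkerboard: alternate classes on a cells x cells board."""
    width = 2 * half_width / cells
    i = min(int((att1 + half_width) / width), cells - 1)
    j = min(int((att2 + half_width) / width), cells - 1)
    return (i + j) % 2


def gini(labels: List[int]) -> float:
    """Gini impurity for binary 0/1 labels."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)  # share of class 1
    return 1.0 - p * p - (1.0 - p) * (1.0 - p)


def best_single_cut(rows: List[List[float]], labels: List[int],
                    attribute: int) -> Tuple[Optional[float], float]:
    """Try every midpoint threshold on one attribute; return (threshold, impurity reduction)."""
    pairs = sorted(zip((row[attribute] for row in rows), labels))
    values = sorted({value for value, _ in pairs})
    root = gini(labels)
    best: Tuple[Optional[float], float] = (None, 0.0)
    for low, high in zip(values, values[1:]):
        threshold = (low + high) / 2
        left = [y for v, y in pairs if v <= threshold]
        right = [y for v, y in pairs if v > threshold]
        weighted = (len(left) * gini(left) + len(right) * gini(right)) / len(pairs)
        if root - weighted > best[1]:
            best = (threshold, root - weighted)
    return best


# Regular 30 x 30 grid of cell centres on [-1.5, 1.5]^2.
grid = [-1.5 + (k + 0.5) * 0.1 for k in range(30)]
rows = [[a, b] for a in grid for b in grid]
labels = [cell_label(a, b) for a, b in rows]

print(round(gini(labels), 3))  # 0.494 (impurity before any split)
threshold, gain = best_single_cut(rows, labels, attribute=0)
print(round(abs(threshold), 2), round(gain, 4))  # 0.5 0.0123
```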

Stacking

Now, let’s “stack” these algorithms together. We use k-NN and Naïve Bayes as base learners and a Decision Tree to combine their results.

The decision tree will get the results of both base learners as well as the original attributes as input:

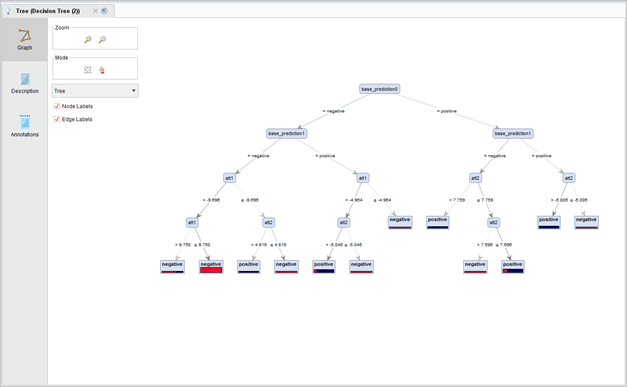

Here, base_prediction0 is the result of Naïve Bayes and base_prediction1 is the result of k-NN. The tree can thus pick regions in which it trusts different algorithms, and within those regions it can split further into smaller regions. The resulting tree looks like this:
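A decision tree does not compute such a table explicitly, but the following hypothetical sketch makes the idea of "regions where it trusts different algorithms" concrete. It groups examples by an assumed coarse 3 x 3 grid on [-1.5, 1.5]² and reports, per region, which of base_prediction0 and base_prediction1 agrees more often with the true label. `region_of` and `trust_by_region` are illustrative names.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def region_of(row: Sequence[float], cells: int = 3, half_width: float = 1.5) -> Tuple[int, int]:
    """Hypothetical helper: (column, row) index of the coarse grid cell containing a point."""
    width = 2 * half_width / cells
    i = min(int((row[0] + half_width) / width), cells - 1)
    j = min(int((row[1] + half_width) / width), cells - 1)
    return (i, j)


def trust_by_region(rows: List[List[float]], labels: List[int],
                    base_prediction0: List[int],
                    base_prediction1: List[int]) -> Dict[Tuple[int, int], str]:
    """For each region, name the base prediction that matches the label more often."""
    hits: Dict[Tuple[int, int], List[int]] = defaultdict(lambda: [0, 0])
    for row, label, p0, p1 in zip(rows, labels, base_prediction0, base_prediction1):
        counts = hits[region_of(row)]
        counts[0] += int(p0 == label)  # Naïve Bayes correct?
        counts[1] += int(p1 == label)  # k-NN correct?
    # Ties go to base_prediction0.
    return {region: ("base_prediction0" if c0 >= c1 else "base_prediction1")
            for region, (c0, c1) in hits.items()}


rows = [[0.0, 0.0], [0.1, 0.1], [1.2, 1.2], [1.3, 1.3]]
labels = [0, 0, 0, 0]
print(trust_by_region(rows, labels, [0, 0, 1, 1], [1, 1, 0, 0]))
# {(1, 1): 'base_prediction0', (2, 2): 'base_prediction1'}
```

A tree learner can reach a similar effect by splitting on att1 and att2 first and then on base_prediction0 or base_prediction1 inside each region.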

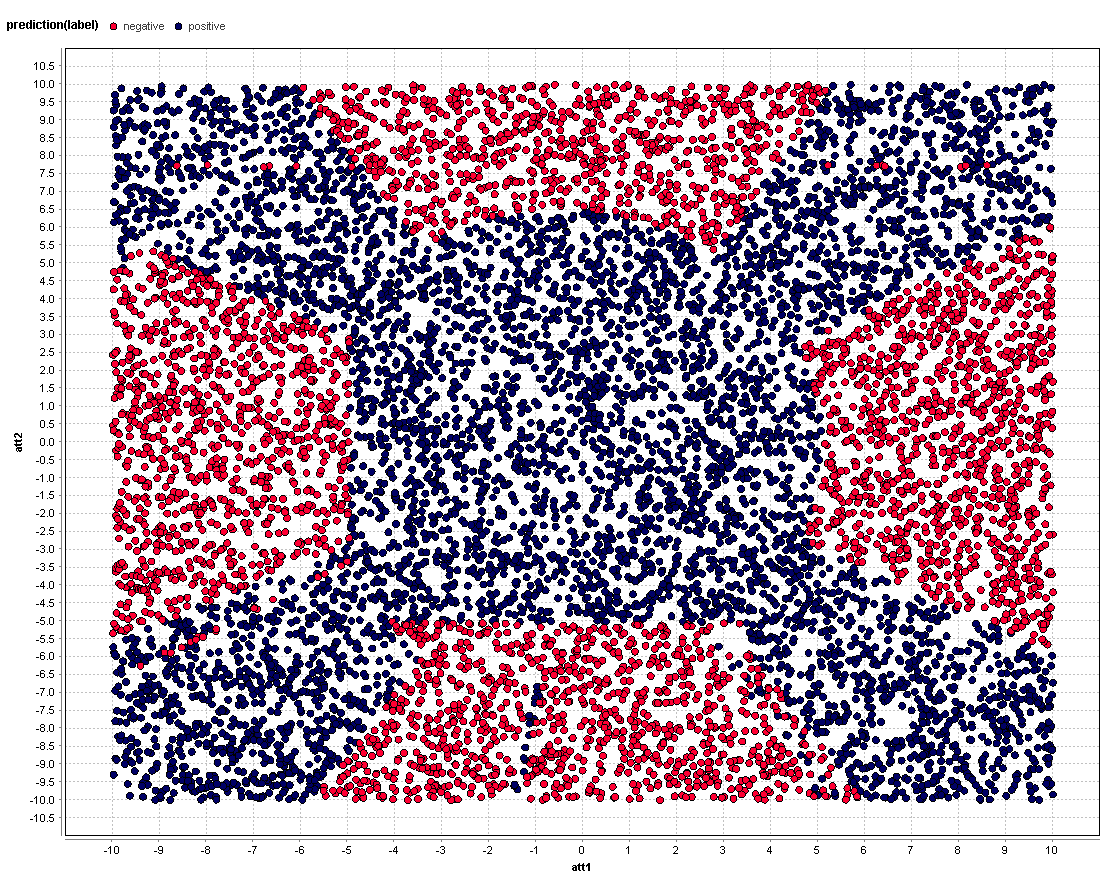

Applied to random test data, the stacked model gives the result depicted below.

This is an impressive result. We take two learners that do not produce good results on their own, combine them with a learner that was not able to do anything on this data set, and get a good result.

Dortmund, Germany

Comments

Hi! Thank you for the nice article. Could you please post the XML file or a link to the process and data set?

Thank you.

Hi @mschmitz,

First, thanks for all the great work and the nice tutorial on stacking. Is it possible to create a stacking of other stackings, sort of like a meta-meta-model? I know this has been done in data competitions (e.g., Kaggle), and I was just testing it out. Below is the XML of a process I created using the 'stacking' tutorial process in RM as the basis. I'm getting the following message:

"The setup does not seem to contain any obvious errors, but you should check the log messages or activate the debug mode in the settings dialog in order to get more information about this problem"

I also tried a variant of this that used a Vote operator on multiple Stacking operators, but unfortunately I got the same 'process failed' message.

Let me know your thoughts.

Hi @acast,

This was actually a bug, fixed in 9.0.3:

https://docs.www.turtlecreekpls.com/latest/studio/releases/changes-9.0.3.html

Greetings,

Jonas