Spark Job Failed - Connection from Cloudera to Radoop

Hello, can someone help me? I get the following error message when connecting Cloudera to RapidMiner Radoop. All services except Spark work and can be connected. What do I have to do so that the Spark job runs as well? Thanks in advance.

[Jul 6, 2017 8:31:03 PM]: Integration test for 'myCloudera' started.

[Jul 6, 2017 8:31:03 PM]: Using Radoop version 7.5.0.

[Jul 6, 2017 8:31:03 PM]: Running tests: [Hive connection, Fetch dynamic settings, Java version, HDFS, MapReduce, Radoop temporary directory, MapReduce staging directory, Spark staging directory, Spark assembly jar existence, UDF jar upload, Create permanent UDFs, HDFS upload, Spark job]

[Jul 6, 2017 8:31:03 PM]: Running test 1/13: Hive connection

[Jul 6, 2017 8:31:03 PM]: Hive server 2 connection (master.cluster.com:10000) test started.

[Jul 6, 2017 8:31:03 PM]: Test succeeded: Hive connection (0.177s)

[Jul 6, 2017 8:31:03 PM]: Running test 2/13: Fetch dynamic settings

[Jul 6, 2017 8:31:03 PM]: Retrieving required configuration properties...

[Jul 6, 2017 8:31:04 PM]: Successfully fetched property: hive.execution.engine

[Jul 6, 2017 8:31:04 PM]: Successfully fetched property: mapreduce.jobhistory.done-dir

[Jul 6, 2017 8:31:04 PM]: Successfully fetched property: mapreduce.jobhistory.intermediate-done-dir

[Jul 6, 2017 8:31:04 PM]: Successfully fetched property: dfs.user.home.dir.prefix

[Jul 6, 2017 8:31:04 PM]: Cannot fetch property: dfs.encryption.key.provider.uri

[Jul 6, 2017 8:31:04 PM]: Successfully fetched property: spark.executor.memory

[Jul 6, 2017 8:31:04 PM]: Successfully fetched property: spark.executor.cores

[Jul 6, 2017 8:31:04 PM]: Successfully fetched property: spark.driver.memory

[Jul 6, 2017 8:31:04 PM]: Cannot fetch property: spark.driver.cores

[Jul 6, 2017 8:31:04 PM]: Successfully fetched property: spark.yarn.executor.memoryOverhead

[Jul 6, 2017 8:31:04 PM]: Successfully fetched property: spark.yarn.driver.memoryOverhead

[Jul 6, 2017 8:31:04 PM]: Successfully fetched property: spark.dynamicAllocation.enabled

[Jul 6, 2017 8:31:04 PM]: Successfully fetched property: spark.dynamicAllocation.initialExecutors

[Jul 6, 2017 8:31:04 PM]: Successfully fetched property: spark.dynamicAllocation.minExecutors

[Jul 6, 2017 8:31:04 PM]: Successfully fetched property: spark.dynamicAllocation.maxExecutors

[Jul 6, 2017 8:31:04 PM]: Cannot fetch property: spark.executor.instances

[Jul 6, 2017 8:31:04 PM]: The specified local value of mapreduce.job.reduces (2) differs from remote value (-1).

[Jul 6, 2017 8:31:04 PM]: The specified local value of mapreduce.reduce.speculative (false) differs from remote value (true).

[Jul 6, 2017 8:31:04 PM]: Test succeeded: Fetch dynamic settings (0.663s)

[Jul 6, 2017 8:31:04 PM]: Running test 3/13: Java version

[Jul 6, 2017 8:31:04 PM]: Cluster Java version: 1.8.0_131-b11

[Jul 6, 2017 8:31:04 PM]: Test succeeded: Java version (0.000s)

[Jul 6, 2017 8:31:04 PM]: Running test 4/13: HDFS

[Jul 6, 2017 8:31:04 PM]: Test succeeded: HDFS (0.291s)

[Jul 6, 2017 8:31:04 PM]: Running test 5/13: MapReduce

[Jul 6, 2017 8:31:04 PM]: Test succeeded: MapReduce (0.106s)

[Jul 6, 2017 8:31:04 PM]: Running test 6/13: Radoop temporary directory

[Jul 6, 2017 8:31:04 PM]: Test succeeded: Radoop temporary directory (0.306s)

[Jul 6, 2017 8:31:04 PM]: Running test 7/13: MapReduce staging directory

[Jul 6, 2017 8:31:05 PM]: Test succeeded: MapReduce staging directory (0.357s)

[Jul 6, 2017 8:31:05 PM]: Running test 8/13: Spark staging directory

[Jul 6, 2017 8:31:05 PM]: Test succeeded: Spark staging directory (0.316s)

[Jul 6, 2017 8:31:05 PM]: Running test 9/13: Spark assembly jar existence

[Jul 6, 2017 8:31:05 PM]: Spark assembly jar existence in the local:// file system cannot be checked. Test skipped.

[Jul 6, 2017 8:31:05 PM]: Test succeeded: Spark assembly jar existence (0.000s)

[Jul 6, 2017 8:31:05 PM]: Running test 10/13: UDF jar upload

[Jul 6, 2017 8:31:05 PM]: Remote radoop_hive-v4.jar is up to date.

[Jul 6, 2017 8:31:05 PM]: Test succeeded: UDF jar upload (0.300s)

[Jul 6, 2017 8:31:05 PM]: Running test 11/13: Create permanent UDFs

[Jul 6, 2017 8:31:06 PM]: Remote radoop_hive-v4.jar is up to date.

[Jul 6, 2017 8:31:06 PM]: Test succeeded: Create permanent UDFs (0.745s)

[Jul 6, 2017 8:31:06 PM]: Running test 12/13: HDFS upload

[Jul 6, 2017 8:31:07 PM]: Uploaded test data file size: 5642

[Jul 6, 2017 8:31:07 PM]: Test succeeded: HDFS upload (1.241s)

[Jul 6, 2017 8:31:07 PM]: Running test 13/13: Spark job

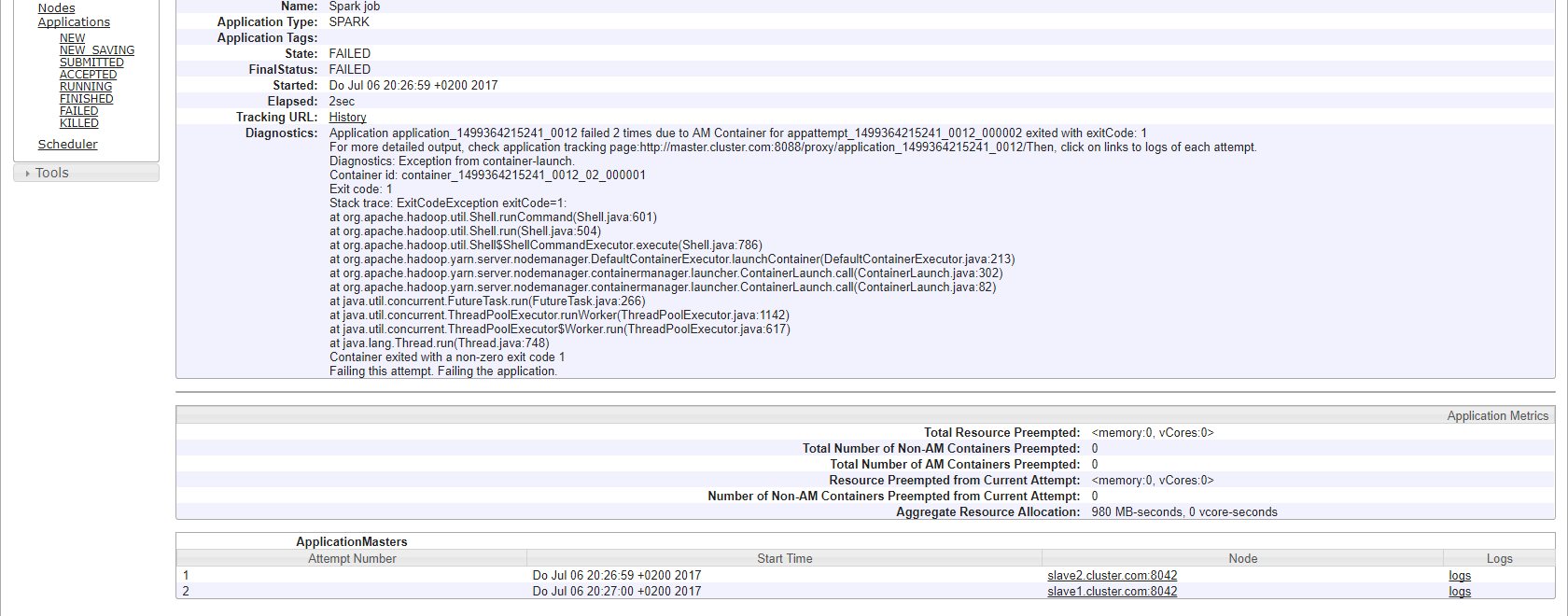

[Jul 6, 2017 8:31:47 PM] SEVERE: Test failed: Spark job

[Jul 6, 2017 8:31:47 PM]: Cleaning after test: Spark job

[Jul 6, 2017 8:31:48 PM]: Cleaning after test: HDFS upload

[Jul 6, 2017 8:31:48 PM]: Cleaning after test: Create permanent UDFs

[Jul 6, 2017 8:31:48 PM]: Cleaning after test: UDF jar upload

[Jul 6, 2017 8:31:48 PM]: Cleaning after test: Spark assembly jar existence

[Jul 6, 2017 8:31:48 PM]: Cleaning after test: Spark staging directory

[Jul 6, 2017 8:31:48 PM]: Cleaning after test: MapReduce staging directory

[Jul 6, 2017 8:31:48 PM]: Cleaning after test: Radoop temporary directory

[Jul 6, 2017 8:31:48 PM]: Cleaning after test: MapReduce

[Jul 6, 2017 8:31:48 PM]: Cleaning after test: HDFS

[Jul 6, 2017 8:31:48 PM]: Cleaning after test: Java version

[Jul 6, 2017 8:31:48 PM]: Cleaning after test: Fetch dynamic settings

[Jul 6, 2017 8:31:48 PM]: Cleaning after test: Hive connection

[Jul 6, 2017 8:31:48 PM]: Total time: 45.007s

[Jul 6, 2017 8:31:48 PM] SEVERE: The Spark test failed. Please verify your Hadoop and Spark version and check if your assembly jar location is correct. If the job failed, check the logs on the ResourceManager web interface at http://master.cluster.com:8088.

[Jul 6, 2017 8:31:48 PM] SEVERE: Test failed: Spark job

[Jul 6, 2017 8:31:48 PM] SEVERE: Integration test for 'myCloudera' failed.

Best Answer

phellinger

Employee, Member, Posts: 103, RM Engineering

The Spark assembly jar could not be found at the specified location. Since it is a local address, the file (or, for Spark 2.x, the directory) must exist on all nodes at the specified path. For example, the default Assembly Jar Location is "local:///opt/cloudera/parcels/CDH/lib/spark/lib/spark-assembly.jar"; in that case, this path must exist on all nodes: /opt/cloudera/parcels/CDH/lib/spark/lib/spark-assembly.jar.

If it is somewhere else, the address must be modified. It is also possible to download an arbitrary Spark library from spark.apache.org, upload it to HDFS, specify an HDFS location (with the prefix "hdfs://"), and choose the proper Spark version.
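To make the local-path requirement concrete, here is a minimal Python sketch (not part of Radoop; the function names are made up for illustration). It splits an Assembly Jar Location value into its prefix and path and, for a "local://" address, checks whether that path exists on the machine where the script runs. Since the path must exist on all nodes, the assumption is that you would run it on each node yourself.

```python
import os
from urllib.parse import urlparse

# Default Assembly Jar Location quoted in the answer above.
DEFAULT_LOCATION = "local:///opt/cloudera/parcels/CDH/lib/spark/lib/spark-assembly.jar"


def split_location(location):
    """Split a location such as 'local:///a/b.jar' into (prefix, path).

    Hypothetical helper for illustration; Radoop does not expose this.
    """
    parsed = urlparse(location)
    return parsed.scheme, parsed.path


def exists_on_this_node(location):
    """Return True if a 'local://' location exists on the current machine.

    Only meaningful for the 'local' prefix: an 'hdfs://' location lives in
    HDFS and cannot be checked with the local file system.
    """
    scheme, path = split_location(location)
    if scheme != "local":
        raise ValueError("expected a local:// location, got prefix: %r" % scheme)
    return os.path.exists(path)


if __name__ == "__main__":
    scheme, path = split_location(DEFAULT_LOCATION)
    print("prefix:", scheme)
    print("path that must exist on every node:", path)
    print("exists on this node:", exists_on_this_node(DEFAULT_LOCATION))
```

If the script reports that the path is missing on any node, either correct the Assembly Jar Location in the connection settings or switch to an "hdfs://" location as described above.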

Peter


Answers

Hello,

can you please follow the first "logs" link on the second screen? It should show the stderr and stdout outputs. If they are too long, then there are links to the full content. The error message and/or stack trace from those should help more in figuring out the cause.

Thanks,

Peter

Log Type: stderr

Log Upload Time: Fr Jul 07 14:27:32 +0200 2017

Log Length: 213

Log Type: stdout

Log Upload Time: Fr Jul 07 14:27:32 +0200 2017

Log Length: 0

Could you please post the link to the jar? I am using Spark 2.2 and have a connection problem.

I can't fix the Spark assembly jar file. I'm using Spark version 2.2.0.

I'm using Apache Hadoop on Windows and I got this error: Spark execution error. The Spark job failed.

Any help, please.