Auto Model process improvement suggestion

kypexin

Moderator, RapidMiner Certified Analyst, Member. Posts: 290. Unicorn

Hi guys,

I have noticed one small thing in the auto-generated Auto Model process that I would count as a candidate for a potential improvement.

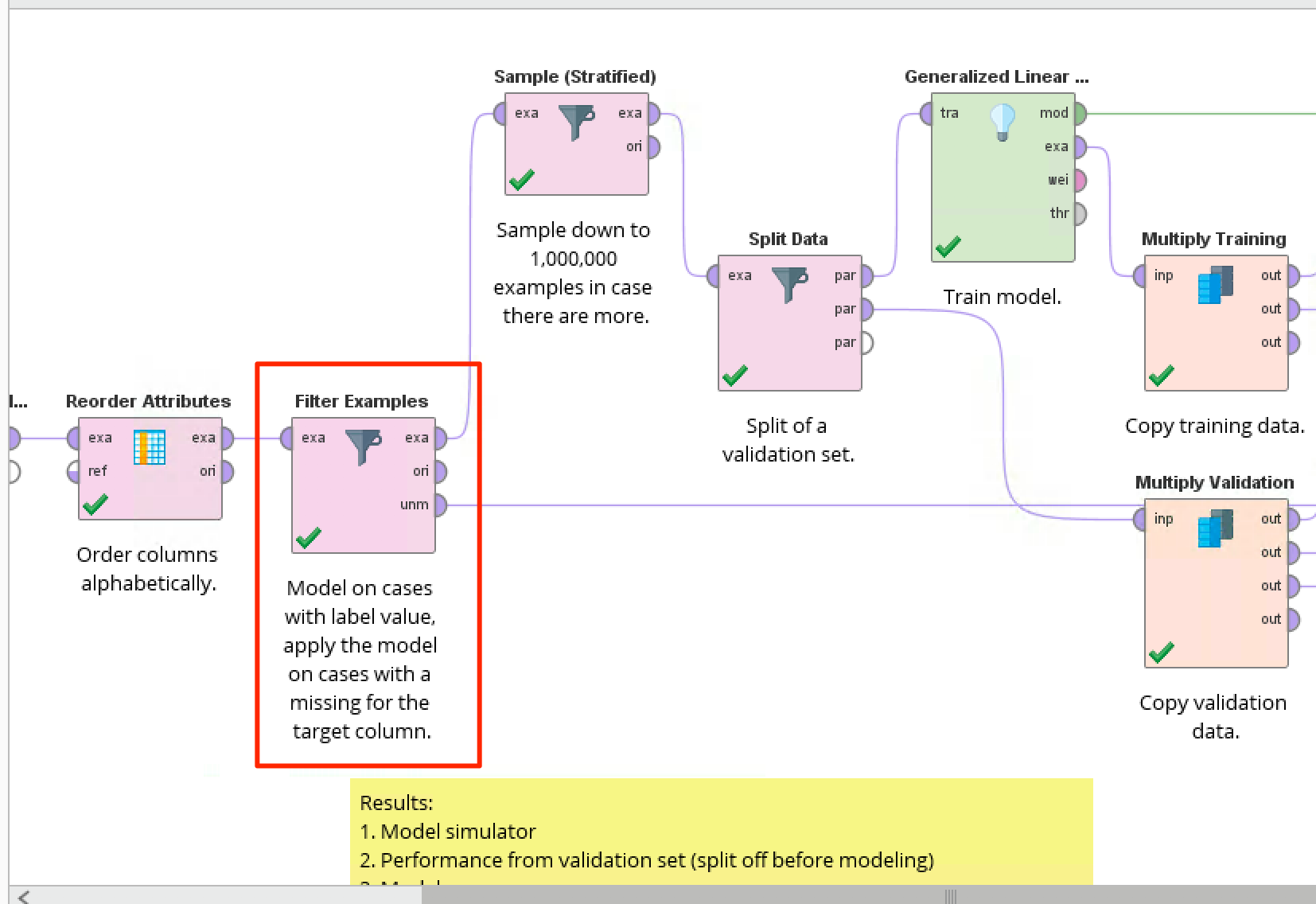

Before training the model, a filtering operator is applied, which is used for the following:

"Model on cases with label value, apply the model on cases with a missing for the target column."

So later on, 'Explain predictions' is applied to the 'unm' output of this filter:

The fact is, we don't necessarily have the data in this exact format (with missing labels for the examples we actually want to predict). On the contrary, I much more often see a use case where a whole labeled dataset is used for auto-modelling and evaluation through an 80/20 split.

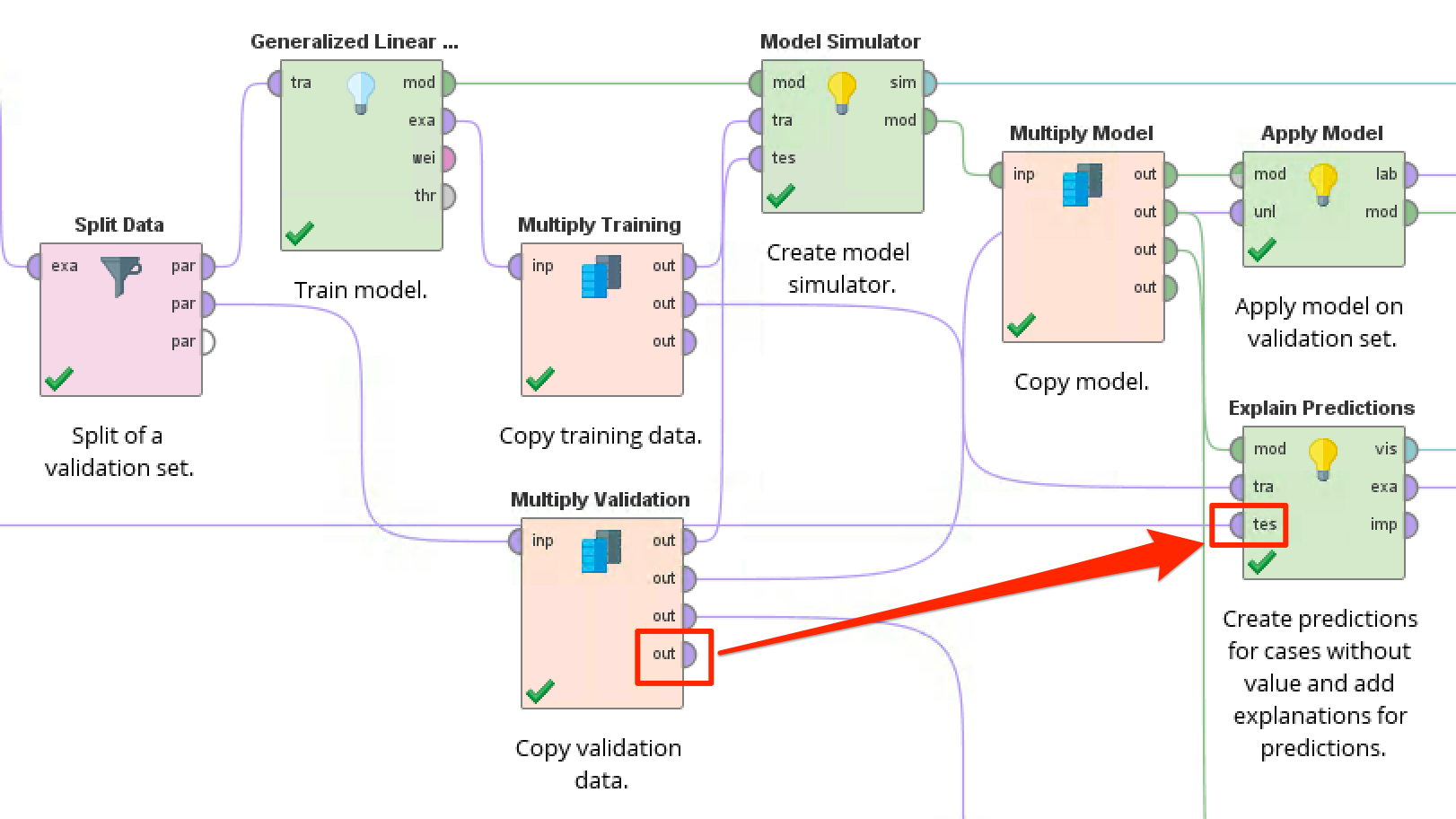

So, what I do every time I save the process from Auto Model is rewire the operators in this way:

This makes much more sense to me, as I train the model on 80% of the data and then apply it to the remaining 20%, which is then also used for explaining the predictions. If I don't rewire the operators, I get an empty result from 'Explain predictions' by default, because on a fully labeled dataset this filter operator always sends 100% of the data to the exa port and 0% to the unm port.
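To illustrate the behaviour outside of RapidMiner, here is a minimal Python sketch of the two wirings. It is not the generated process itself: the function names `filter_by_label` and `split_80_20`, the `label` column name, and the toy dataset are assumptions made only for this example.

```python
import random

def filter_by_label(rows, label="label"):
    """Mimic the filter: 'exa' gets rows with a label value, 'unm' gets rows without one."""
    exa = [r for r in rows if r.get(label) is not None]
    unm = [r for r in rows if r.get(label) is None]
    return exa, unm

def split_80_20(rows, train_ratio=0.8, seed=42):
    """Shuffle a copy of the rows and cut it into a training part and a hold-out part."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * train_ratio)
    return shuffled[:cut], shuffled[cut:]

# Hypothetical fully labeled dataset of 10 examples.
rows = [{"id": i, "label": "yes" if i % 2 else "no"} for i in range(10)]

exa, unm = filter_by_label(rows)
train, test = split_80_20(exa)

# Generated wiring: explanations are computed on 'unm', which is empty here.
explained_default = unm
# Rewired process: explanations are computed on the 20% hold-out instead.
explained_rewired = test

print(len(exa), len(unm), len(train), len(test))
```

With a fully labeled dataset, `explained_default` has no rows at all, while `explained_rewired` contains the hold-out examples.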

Do you think this can be improved in some way so the process is generated in a more flexible way, depending on the initial dataset format?

Comments

cc @IngoRM

Hi,

One easy option would be to create the predictions and explanations for both the cases with missing labels (as it is now) and the 20% test cases. In the UI, we could always show the explanations for the test cases, and show those for the unlabeled examples only if there are any.

What do you think about this approach? Keep in mind though that explain predictions can be a bit slow in some cases - so if your data set is very large, the creation of the explanations for the 20% test cases might take a while...
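As a rough sketch of that idea in Python (not actual Auto Model code; the function name `explanation_sets` and the dictionary keys are made up for illustration), the selection logic could look something like this:

```python
def explanation_sets(test_rows, unlabeled_rows):
    """Always explain the hold-out test cases; add the unlabeled cases only if any exist."""
    sets = {"test": list(test_rows)}
    if unlabeled_rows:
        sets["unlabeled"] = list(unlabeled_rows)
    return sets

# Fully labeled data: only the test cases are explained and shown.
fully_labeled = explanation_sets(test_rows=[{"id": 1}, {"id": 2}], unlabeled_rows=[])

# Data with missing labels: both sets are explained.
with_missing = explanation_sets(test_rows=[{"id": 1}], unlabeled_rows=[{"id": 9}])

print(list(fully_labeled), list(with_missing))
```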

Best,

Ingo

Hi @IngoRM,

Yes, I think this can be an easy option - to explain predictions for both unlabeled and 20% test examples.

Another thought I had is a slightly more complicated solution, where Auto Model would automatically detect the structure of the dataset it works with (whether it is fully labeled or has missing labels) and generate the process depending on that.
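Just to make the idea concrete, a small Python sketch of such a check might look like the following. Everything here is hypothetical: the names `detect_structure` and `explain_source`, the `label` column, and the returned strings are my own placeholders, not anything Auto Model exposes.

```python
def detect_structure(rows, label="label"):
    """Classify a dataset by how many examples are missing the label value."""
    missing = sum(1 for r in rows if r.get(label) is None)
    if missing == 0:
        return "fully_labeled"
    if missing == len(rows):
        return "no_labels"
    return "partially_labeled"

def explain_source(structure):
    """Pick which examples 'Explain predictions' should receive (hypothetical mapping)."""
    mapping = {
        "fully_labeled": "holdout_20",  # explain the 20% test split
        "partially_labeled": "unm",     # explain the unlabeled examples, as it is now
    }
    # Returns None when there are no labels at all, since no model can be trained.
    return mapping.get(structure)

labeled = [{"label": "yes"}, {"label": "no"}]
mixed = [{"label": "yes"}, {"label": None}]

print(explain_source(detect_structure(labeled)))
print(explain_source(detect_structure(mixed)))
```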

Vladimir

http://whatthefraud.wtf