Criterion for overfitting evaluation

Learner I

in Help

Hello everyone. Have a nice day. I am having some trouble with overfitting. I have been searching for information on the RM Community and other websites. They say that if the accuracy is greater than 90%, I am most probably facing overfitting. My case is below:

I have the datasets like this:

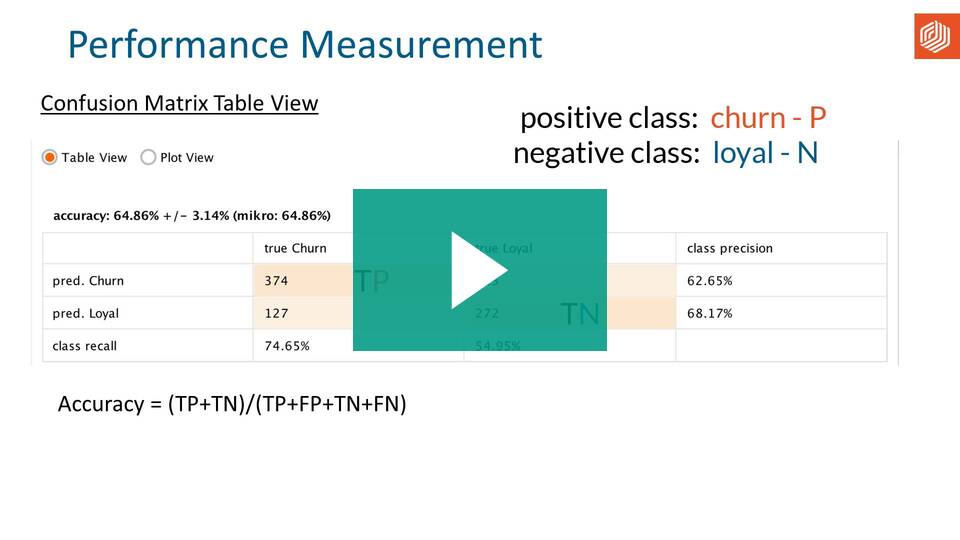

Then I created a process using classification (decision tree) with bank-additional-full.csv as training data and bank-additional.csv as test data. After running it, the accuracy is about 97% (and the correlation is about 79%).

I think this is overfitting. Is that correct? If so, how can I fix this problem? And is accuracy the only way to evaluate overfitting? Please help me. Thank you.

Best Answers

MarcoBarradas

Administrator, Employee, RapidMiner Certified Analyst, Member Posts: 267 Unicorn

Hi @Hung_Bui_221

These videos might help clarify things. You may have an accuracy of 97%, but what is the recall on the class that you are trying to predict?

https://academy.www.turtlecreekpls.com/learn/article/overfitting-outliers
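To illustrate why recall matters alongside accuracy, here is a minimal Python sketch (not a RapidMiner process). The data is made up: 100 examples of which only 5 are "yes", and a hypothetical model that finds 2 of them. The function name `accuracy_and_recall` is just for illustration.

```python
def accuracy_and_recall(actual, predicted, positive="yes"):
    """Return (accuracy, recall of the positive class)."""
    pairs = list(zip(actual, predicted))
    correct = sum(a == p for a, p in pairs)
    true_pos = sum(a == positive and p == positive for a, p in pairs)
    actual_pos = sum(a == positive for a in actual)
    accuracy = correct / len(actual)
    recall = true_pos / actual_pos if actual_pos else 0.0
    return accuracy, recall

# Hypothetical, imbalanced data: 5 "yes" and 95 "no".
actual = ["yes"] * 5 + ["no"] * 95
# Hypothetical model output: finds only 2 of the 5 "yes" cases.
predicted = ["yes"] * 2 + ["no"] * 98

acc, rec = accuracy_and_recall(actual, predicted)
print(f"accuracy={acc:.2f} recall={rec:.2f}")
```

In this made-up case the accuracy is 97% while the recall on "yes" is only 40%, so a high accuracy on its own says little when one class is rare.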

BalazsBarany

Administrator, Moderator, Employee, RapidMiner Certified Analyst, RapidMiner Certified Expert Posts: 932 Unicorn

Hi!

Just having a high accuracy doesn't mean that you have overfitting. You could also have a good model.

Look at the decision tree and try different pruning parameter settings to control the possibility of overfitting. You'll be able to see if the tree is getting very complex and making nonsensical decisions (like "if first name = Peter then Label") or not.

An overfitted model doesn't work well on new data. Therefore, you just need to make sure that you verify correctly. See these videos in the Academy:

https://academy.www.turtlecreekpls.com/learn/video/validating-a-model

https://academy.www.turtlecreekpls.com/learn/video/optimization-of-the-model-parameters
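As a rough illustration of "doesn't work well on new data", the Python sketch below builds a deliberately overfitted model that memorizes a unique ID (the same kind of rule as "if first name = Peter") and compares its accuracy on the training rows with its accuracy on held-out rows. The data and the names `train_memorizer` and `accuracy` are hypothetical; this is not how RapidMiner's validation operators are implemented.

```python
from collections import Counter

def train_memorizer(rows):
    """Build a lookup table keyed on a unique ID; unseen IDs get the
    majority label of the training rows."""
    table = {row_id: label for row_id, label in rows}
    fallback = Counter(label for _, label in rows).most_common(1)[0][0]
    return lambda row_id: table.get(row_id, fallback)

def accuracy(model, rows):
    """Fraction of rows whose predicted label matches the true label."""
    return sum(model(row_id) == label for row_id, label in rows) / len(rows)

# Hypothetical data: (customer_id, label). Every ID is unique, so
# memorizing IDs cannot generalize to rows the model has not seen.
data = [(i, "yes" if i % 3 == 0 else "no") for i in range(90)]
train, test = data[:60], data[60:]

model = train_memorizer(train)
train_acc = accuracy(model, train)
test_acc = accuracy(model, test)
print(f"train={train_acc:.2f} test={test_acc:.2f}")
```

The large gap between the two numbers is the sign of overfitting. A similar accuracy on training data and properly held-out data suggests the model generalizes.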

Regards,

Balázs

BalazsBarany

Administrator, Moderator, Employee, RapidMiner Certified Analyst, RapidMiner Certified Expert Posts: 932 Unicorn

Hi!

Bagging and other ensemble methods can help reduce overfitting and make models more robust. When you obtain 10 trees in a bagging model, those 10 trees together are the model; you don't choose one of them. It is probably as good as or better than just one tree.
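A minimal Python sketch of how a bagged model can combine its trees by majority vote. The three "trees" here are hypothetical one-rule stand-ins with made-up attribute names and thresholds, and `bagged_predict` is an illustrative name, not a RapidMiner function.

```python
from collections import Counter

def bagged_predict(trees, row):
    """Return the label that most of the trees vote for."""
    votes = Counter(tree(row) for tree in trees)
    return votes.most_common(1)[0][0]

# Hypothetical stand-ins for trees trained on different bootstrap samples.
trees = [
    lambda r: "yes" if r["duration"] > 300 else "no",
    lambda r: "yes" if r["previous"] > 0 else "no",
    lambda r: "yes" if r["euribor3m"] < 2.0 else "no",
]

row = {"duration": 400, "previous": 0, "euribor3m": 1.3}
prediction = bagged_predict(trees, row)
print(prediction)  # two of the three trees vote "yes"
```

No single tree is picked. Each one votes, and the errors of an individual tree tend to be outvoted by the others.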

With tree based methods, correlation is not that big of a problem. When one attribute is selected for a split, correlated attributes don't really matter.

If you suspect that the correlation of polynominal attributes might be making your model worse, you should validate that assumption. A good way to test this is to use Nominal to Numerical, which re-codes the nominal attribute values as new 0/1 attributes. Then you could apply similar correlation-based filters.
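The idea can be sketched in plain Python: re-code a nominal attribute into 0/1 columns, then compute an ordinary Pearson correlation between one of those columns and another attribute. The attribute values below are invented, and `one_hot` and `pearson` are illustrative helpers, not RapidMiner operators.

```python
from math import sqrt

def one_hot(values):
    """Re-code nominal values as one 0/1 column per distinct value."""
    return {c: [1 if v == c else 0 for v in values] for c in sorted(set(values))}

def pearson(x, y):
    """Pearson correlation of two equally long numeric lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy) if sx and sy else 0.0

# Hypothetical nominal attribute and a second, redundant 0/1 attribute.
job = ["admin", "student", "admin", "retired", "student", "admin"]
is_student = [0, 1, 0, 0, 1, 0]

columns = one_hot(job)
r = pearson(columns["student"], is_student)
print(f"correlation(job=student, is_student) = {r:.2f}")
```

Here the re-coded column job=student is perfectly correlated with is_student, so a filter with a 0.95 threshold would flag the pair.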

Regards,

Balázs

Answers

Besides, I have 2 more questions:

1. In Optimize, I use Bagging (with Decision Tree inside) because, as far as I know, this is also a way to reduce overfitting. Is that correct? After running it, I obtained 10 trees. How do I know which tree should be chosen?

2. As far as I know, highly correlated attributes should be removed. So I used Weight by Correlation for the numerical and binominal attributes and then removed those with a correlation greater than 0.95. But what about polynominal attributes? At first I used Weight by Information Gain and Select by Weight for them. Then I got confused and changed to Correlation Matrix for all attributes, like the image above. In this case, what should I do?

Sorry for the long post. And thank you again for looking at my questions.