You are viewing the RapidMiner Radoop documentation for version 9.9.

Azure HDInsight 3.6

RapidMiner Radoop supports version 3.6 of Azure HDInsight, a cloud-based Hadoop service that is built upon the Hortonworks Data Platform (HDP) distribution. If RapidMiner Radoop does not run inside the Azure network, there are a couple of options for the networking setup. A solution like Azure ExpressRoute or a VPN can simplify the setup. However, if those options are not available, the HDInsight clusters can be accessed using Radoop Proxy, which coordinates all the communication between RapidMiner Studio and the cluster resources. Since this setup is the most complex, this guide assumes this scenario. Feel free to skip steps that are not required because of an easier networking setup.

Connecting to an Azure HDInsight 3.6 cluster using Radoop Proxy

For a proper networking setup, a RapidMiner Server instance (with Radoop Proxy enabled) should be installed on an additional machine that is located in the same virtual network as the cluster nodes. The following guide provides the necessary steps for establishing a proxied connection to an HDInsight cluster.

Starting an HDInsight cluster

If you already have an HDInsight cluster running in the Azure network, skip these steps entirely.

Create a new Virtual network for all the network resources that will be created during cluster setup. The default Address space and Subnet address range may be suitable for this purpose. Use the same Resource group for all resources that are created during the whole cluster setup procedure.

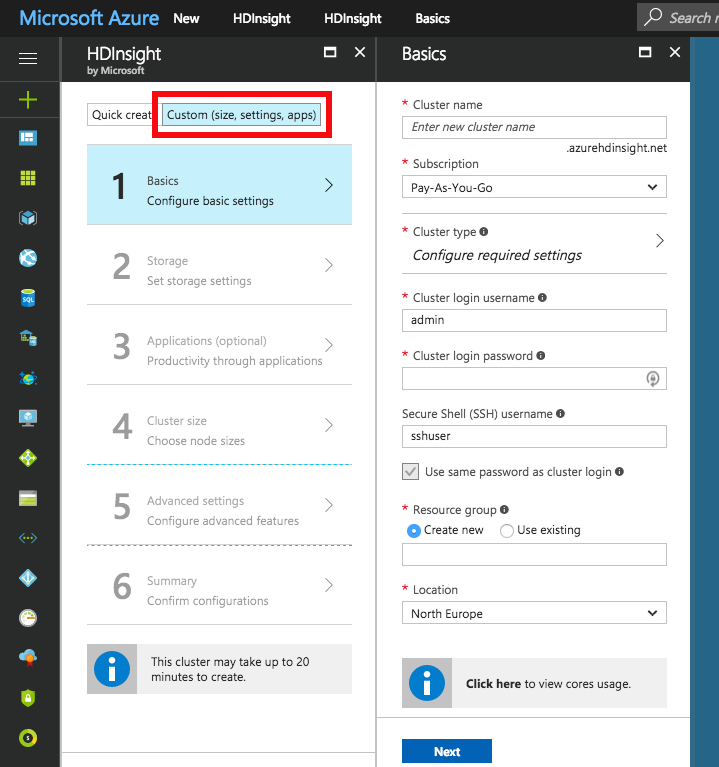

Use the Custom (size, settings, apps) option instead of Quick create for creating the cluster. Choose the Spark cluster type with the Linux operating system, and the latest Spark version supported by Radoop, which is Spark 2.2.0 (HDI 3.6) as of this writing. Fill in all the required login credential fields. Select the previously defined Resource group.

Choose the Primary storage type of the cluster. You may specify additional storage accounts as well.

- Azure Storage: Provide a new or already existing Storage account and a Default container name. You may connect to as many Azure Storage accounts as needed.

- Data Lake Store: Provide a Data Lake Store account. Make sure that the root path exists and the associated Service principal has adequate privileges for accessing the chosen Data Lake Store and path. Please note that a Service principal can be re-used for other cluster setups as well. For this purpose, it is recommended to save the Certificate file and the Certificate password for future reference. Once a Service principal is chosen, the access rights for any Data Lake Stores can be configured via this single Service principal object.

Configure the Cluster size of your choice.

On the Advanced settings tab, choose the previously created Virtual network and Subnet.

After getting through all the steps of the wizard, create the cluster. After it has started, find the private IPs and private domain names of the master nodes. You will need to copy these to your local machine. This step is required because some domain name resolutions need to take place on the client (RapidMiner Studio) side. The easiest way to do this is by copying them from one of the cluster nodes. Navigate to the dashboard of your HDInsight cluster, and select the SSH + Cluster login option. Choose any item from the Hostname selector. On Linux and Mac systems you can use the ssh command appearing below the selector. On Windows systems you will have to extract the hostname and the username from the command, and use PuTTY to connect to the host. The password is the one you provided when filling in the login credentials during cluster creation. Once you are connected, view the contents of the /etc/hosts file of the remote host, for example by issuing the following command:

cat /etc/hosts

Copy all the entries with long, generated hostnames. Paste them into the hosts file of your local machine, which is available at the following location:

- For Windows systems: Windows\system32\drivers\etc\hosts

- For Linux and Mac systems: /etc/hosts
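Picking out the relevant entries can be partly automated. The following is a minimal sketch, not part of Radoop. It assumes that the generated hostnames end in `internal.cloudapp.net`, which is an assumption about the private DNS suffix of the cluster; adjust the suffix to whatever you actually see in the remote /etc/hosts. The function name `extract_cluster_entries` and the sample addresses are hypothetical.

```python
def extract_cluster_entries(hosts_text, suffix="internal.cloudapp.net"):
    """Return the hosts-file entries that contain a hostname ending in `suffix`.

    The default suffix is an assumption; check it against your own cluster.
    """
    entries = []
    for line in hosts_text.splitlines():
        # Drop trailing comments and surrounding whitespace.
        content = line.split("#", 1)[0].strip()
        if not content:
            continue
        fields = content.split()
        # A hosts entry is an IP address followed by one or more names.
        if len(fields) < 2:
            continue
        if any(name.endswith(suffix) for name in fields[1:]):
            entries.append(content)
    return entries


# Made-up sample of what `cat /etc/hosts` might print on a cluster node.
sample = """\
127.0.0.1 localhost
# cluster nodes
10.0.0.18 hn0-example.abc123.bx.internal.cloudapp.net hn0-example
10.0.0.19 hn1-example.abc123.bx.internal.cloudapp.net hn1-example
"""

for entry in extract_cluster_entries(sample):
    print(entry)
```

The printed lines are the ones to paste into the local hosts file. Editing that file usually requires administrator privileges, so the sketch deliberately only prints the entries instead of writing them.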

Starting RapidMiner Server and Radoop Proxy

Create a new RapidMiner Server virtual machine in Azure. For this you will need to select the "Create a resource" option and search the Marketplace for RapidMiner Server. Select the BYOL version which best matches your Studio version. Press Create and start configuring the virtual machine. Provide the Basic settings according to your taste, but make sure that you use the previously configured Resource group and the same Location as for your cluster. Click Ok, then select a virtual machine size with at least 10GB of RAM. Configure optional features. It is essential that the same Virtual network and Subnet are selected in the Network settings as the ones used for the cluster. All other settings may remain unchanged. Check the summary, then click Create.

Once the VM is started, you still need to wait a few minutes for RapidMiner Server to start. The easiest way to validate this is to open (Public IP address of the VM):8080 in your browser. Once that page loads, you can log in with the admin username and the name of your VM in Azure as the password. You will immediately be asked for a valid license key. A free license is perfectly fine for this purpose. If your license is accepted, you can close this window; you will not need it anymore.

Setting up the connection in RapidMiner Studio

First, create a Radoop Proxy Connection for the newly installed Radoop Proxy (described here in Step 1). The needed properties are:

| Field | Value |
|---|---|
| Radoop Proxy server host | Provide the IP address of the MySQL server instance. |
| Radoop Proxy server port | The value of radoop_proxy_port in the used RapidMiner Server install configuration XML (1081 by default). |
| RapidMiner Server username | admin (by default) |
| RapidMiner Server password | name of Azure proxy VM (by default) |
| Use SSL | false (by default) |
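Before entering these values in Studio, it can help to confirm that the proxy port is reachable from the client machine at all. The following is a minimal sketch using only the Python standard library. It checks that a TCP connection can be opened and nothing more; it does not speak the Radoop Proxy protocol. The helper name `port_is_reachable` is hypothetical.

```python
import socket


def port_is_reachable(host, port, timeout=5.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        # create_connection resolves the host and connects within `timeout`.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name resolution failures.
        return False


# Usage (replace the placeholder with the address of your proxy machine;
# 1081 is the default Radoop Proxy port mentioned in the table above):
#   port_is_reachable("<proxy host>", 1081)
```

A `False` result typically points to a firewall or network security rule on the Azure side, or to RapidMiner Server not having finished starting yet.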

For setting up a new Radoop connection to an Azure HDInsight 3.6 cluster, we strongly recommend choosing the Import from Cluster Manager option, as it offers by far the easiest way to make the connection work correctly. This section describes the Cluster Manager import process. The Cluster Manager URL should be the base URL of the Ambari interface web page (e.g. https://radoopcluster.azurehdinsight.net). You can easily access it by clicking Ambari Views on the cluster dashboard.
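If you copy the address from the browser while an Ambari page is open, it may carry a path or fragment after the host name. The following is a small sketch of reducing such an address to its base URL with the Python standard library. The helper name `base_url` and the example fragment `#/main/dashboard` are hypothetical and only serve as an illustration.

```python
from urllib.parse import urlsplit


def base_url(url):
    """Return scheme://host[:port] of `url`, dropping path, query and fragment."""
    parts = urlsplit(url)
    if not parts.scheme or not parts.netloc:
        raise ValueError("Not an absolute URL: " + repr(url))
    return parts.scheme + "://" + parts.netloc


# The host name is the example from the text; the fragment is made up.
print(base_url("https://radoopcluster.azurehdinsight.net/#/main/dashboard"))
# prints https://radoopcluster.azurehdinsight.net
```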

After the connection is imported, most of the required settings are filled automatically. In most cases, only the following properties have to be provided manually:

| Field | Value |
|---|---|
| Advanced Hadoop Parameters | Disable the following properties: io.compression.codec.lzo.class and io.compression.codecs |
| Hive Server Address | This is only needed if you do not use the ZooKeeper service discovery (Hive High Availability is unchecked). It can be found on the Ambari interface (Hive / HiveServer2). In most cases, it is the same as the NameNode address. |
| Radoop Proxy Connection | The previously created Radoop Proxy Connection should be chosen. |
| Spark Version | Select the version matching the Spark installation on the cluster, which is Spark 2.2 if you followed the above steps for the HDInsight install. |
| Spark Archive (or libs) path | For Spark 2.2 (with HDInsight 3.6), the default value is local:///usr/hdp/current/spark2-client/jars. Unless you use a different Spark version, you can leave the Use default Spark path checkbox selected. |
| Advanced Spark Parameter | Create the spark.yarn.appMasterEnv.PYSPARK_PYTHON property with a value of /usr/bin/anaconda/bin/python. |

You will also need to configure your storage credentials, which is described in the Storage credentials setup section. If you want to connect to a premium cluster, you will need to follow the steps in the Connecting to a Premium cluster section. Once you have completed these steps, you can click OK on the Connection Settings dialog, and save your connection.

It is essential that the RapidMiner Radoop client can resolve the hostnames of the master nodes. Follow the instructions in the last step of the Starting an HDInsight cluster section to add these hostnames to your operating system's hosts file.

Storage credentials setup

An HDInsight cluster can have multiple storage instances attached, which may even have different storage types (Azure Storage and Data Lake Store). To access them, the related credentials must be provided in the Advanced Hadoop Parameters table. The following sections clarify the type of credentials needed, and how they can be acquired.

It is essential that the credentials of the primary storage are provided.

You may have multiple Azure Storage accounts attached to your HDInsight cluster, provided that any additional storage accounts were specified during cluster setup. All of these have access key(s), which can be found on the Access keys tab of the storage dashboard. To enable access to an Azure Storage account, provide this key as an Advanced Hadoop Parameter:

| Key | Value |
|---|---|
| fs.azure.account.key. | the storage access key |

As mentioned above, a single Active Directory service principal object can be attached to the cluster. This controls the access rights towards Data Lake Store(s). Only one Data Lake Store can take the role of the primary storage. In order to enable Radoop to access a Data Lake Store through this principal, the following Advanced Hadoop Parameters have to be specified:

| Key | Value |
|---|---|
| dfs.adls.oauth2.access.token.provider.type | ClientCredential |
| dfs.adls.oauth2.refresh.url | OAuth 2.0 Token Endpoint address |
| dfs.adls.oauth2.client.id | Service principal application ID |
| dfs.adls.oauth2.credential | Service principal access key |
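To keep the four entries consistent when preparing them for several connections, the table can be expressed as a small helper. This is only an illustrative sketch: the function name `adls_hadoop_parameters` and all argument values are hypothetical placeholders, and the resulting key/value pairs still have to be entered in the Advanced Hadoop Parameters table by hand.

```python
def adls_hadoop_parameters(token_endpoint, application_id, access_key):
    """Build the Data Lake Store related Advanced Hadoop Parameters."""
    values = {
        "dfs.adls.oauth2.access.token.provider.type": "ClientCredential",
        "dfs.adls.oauth2.refresh.url": token_endpoint,
        "dfs.adls.oauth2.client.id": application_id,
        "dfs.adls.oauth2.credential": access_key,
    }
    # Fail early if one of the values was left empty.
    missing = [key for key, value in values.items() if not value]
    if missing:
        raise ValueError("Missing value for: " + ", ".join(missing))
    return values


# Placeholder values only; use the ones taken from your Azure Active Directory.
params = adls_hadoop_parameters(
    token_endpoint="<OAuth 2.0 Token Endpoint address>",
    application_id="<Service principal application ID>",
    access_key="<Service principal access key>",
)
for key, value in params.items():
    print(key, "=", value)
```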

You can acquire all of these values on the Azure Active Directory dashboard (available in the service list of the main Azure Portal). Click App registrations on the dashboard, then look for the needed values as follows:

- For the OAuth 2.0 Token Endpoint address, go to Endpoints, and copy the value of OAuth 2.0 Token Endpoint.

- On the App registrations page, choose the Service principal associated with your HDInsight cluster, and provide the value of Application ID as the Service principal application ID.

- Click Keys. Generate a new key by entering a name and an expiry date, and replace the value of Service principal access key with the generated password.

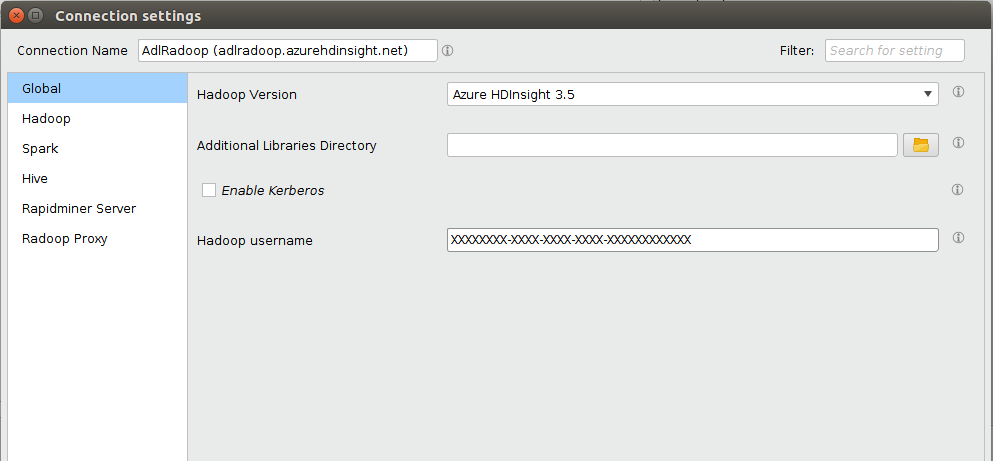

Finally, go to the HDInsight cluster main page, and click Data Lake Store access in the menu. Provide the value of Service Principal Object ID as the Hadoop Username.

Connecting to a Premium cluster (having Kerberos enabled)

If you have set up or have a Premium HDInsight cluster (subscription required), some additional connection settings are required for Kerberos-based authentication.

- The Configuring Kerberos authentication section describes general Kerberos-related settings.

- As for all Hortonworks distribution based clusters, you also have to apply a Hive setting (hive.security.authorization.sqlstd.confwhitelist.append) described in this section. Please note that a Hive service restart will be needed.

- We strongly advise using the Import from Cluster Manager option for creating a Radoop connection to the Kerberized cluster. The import process covers some necessary changes in Advanced Hadoop Parameters that are required for the connection to work as expected.