RapidMiner Radoop Operators

This section provides an overview of the Radoop operator group. For a complete list of these operators, see the RapidMiner Radoop Operator Reference (PDF).

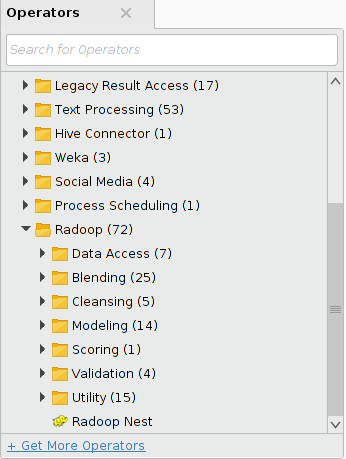

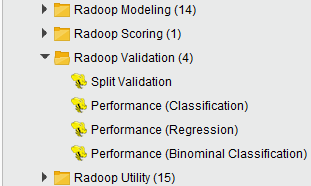

When you install the RapidMiner Radoop extension, several new operators become available in the Radoop operator group of RapidMiner Studio.

To help you find specific functions and understand the different types of operators available, this section gives an overview of each operator group. For full operator descriptions, see the help text within RapidMiner Studio.

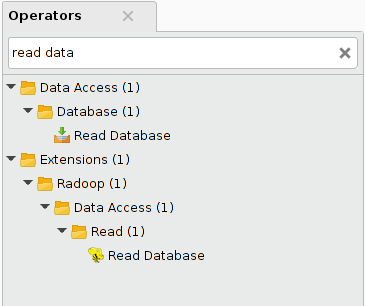

All operators mentioned here are part of the Radoop operator group inside the Extensions group. An operator with the same name may also exist among the standard RapidMiner operators. The Radoop version is listed in the Radoop folder and is marked with a different icon. For example, searching on Read Database returns these results:

Data Access group

The following operators are part of the Data Access group:

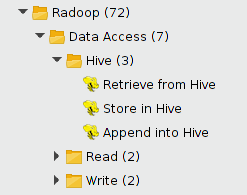

Hive subgroup

The following operators are part of the Hive subgroup:

Note: In the text below, these operators are referred to by shortened names for easier reading.

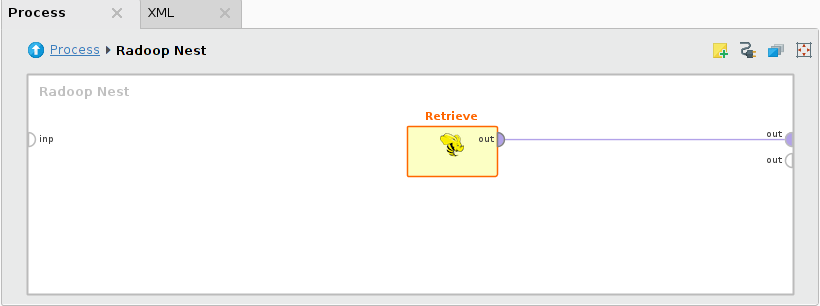

A process accesses data that already resides on the cluster in a Hive table by using the Retrieve operator. Retrieve only loads references and metadata into memory; the data remains on the cluster to be processed by further operators. Connect the output port of Retrieve directly to the Radoop Nest output to fetch a data sample into memory.

The Store and Append operators write the data on their input ports into a Hive table. This is typically time consuming, because the cluster has to carry out all the tasks defined by the preceding data processing operators before writing the data to the HDFS. Append also verifies that the data on its input fits the structure of the specified Hive table.

In all operators in this subgroup, you can also select tables from other Hive databases.
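The structure check that Append performs can be pictured as a schema comparison. The sketch below is a hypothetical illustration, not Radoop code: it assumes a schema is a mapping from attribute name to Hive type name, and that the input must contain exactly the table's columns with matching types.

```python
from typing import Dict, List

# Assumed representation (hypothetical): attribute name -> Hive type name.
Schema = Dict[str, str]


def append_errors(table: Schema, incoming: Schema) -> List[str]:
    """List the reasons why `incoming` data could not be appended to `table`.

    Assumes the input must have exactly the table's columns, with matching types.
    """
    errors = []
    for name, col_type in incoming.items():
        if name not in table:
            errors.append(f"column '{name}' does not exist in the table")
        elif table[name] != col_type:
            errors.append(f"column '{name}' is {col_type}, the table expects {table[name]}")
    for name in table:
        if name not in incoming:
            errors.append(f"column '{name}' is missing from the input")
    return errors


target = {"id": "bigint", "amount": "double", "region": "string"}
print(append_errors(target, {"id": "bigint", "amount": "double", "region": "string"}))  # []
print(append_errors(target, {"id": "bigint", "amount": "string"}))  # a type mismatch and a missing column
```

The exact rules Radoop applies (for example, whether compatible numeric types are accepted) are not described here, so treat the strict equality test as an assumption.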

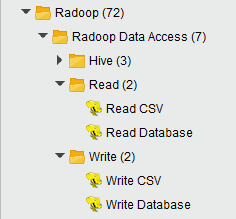

Read subgroup

Data must reside on the cluster to be processed and analyzed by Radoop operators. Smaller data sets may fit into memory; when you connect such an ExampleSet to one of the Radoop Nest input ports, Radoop imports it. For larger data sets, use an operator from the Read group: either Read CSV or Read Database.

Read CSV creates a Hive table from a file that resides on the client, on the HDFS, or on Amazon S3. With the help of a wizard, you can define the separator and the attribute names (or read them from the file), as well as modify the attribute types or set the attribute roles.
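As a rough picture of what the wizard settings control, the following sketch parses delimited text with a chosen separator, optionally takes attribute names from the first line, and guesses a type per column. It is a hypothetical local illustration using Python's `csv` module; the function names and the integer/real/nominal guessing rule are assumptions, not how Radoop builds the Hive table.

```python
import csv
import io
from typing import Dict, List, Optional, Tuple


def guess_type(values: List[str]) -> str:
    """Pick the narrowest of integer, real, or nominal that fits every non-empty value."""
    def all_parse(cast) -> bool:
        try:
            for v in values:
                if v != "":
                    cast(v)
            return True
        except ValueError:
            return False

    if all_parse(int):
        return "integer"
    if all_parse(float):
        return "real"
    return "nominal"


def read_csv(text: str, separator: str = ",",
             names: Optional[List[str]] = None) -> Tuple[List[str], Dict[str, str], List[List[str]]]:
    """Split text on `separator`; if no names are given, read them from the first line."""
    rows = list(csv.reader(io.StringIO(text), delimiter=separator))
    if names is None:
        names, rows = rows[0], rows[1:]
    columns = list(zip(*rows))
    types = {name: guess_type(list(col)) for name, col in zip(names, columns)}
    return names, types, rows


names, types, rows = read_csv("id;price;city\n1;9.5;Berlin\n2;12;Paris\n", separator=";")
print(types)  # {'id': 'integer', 'price': 'real', 'city': 'nominal'}
```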

Read Database queries arbitrary data from a database and writes the results to the cluster. The database must be accessible from the client, which writes the data to the HDFS (using only a minimal memory footprint on the client). Radoop supports MySQL, PostgreSQL, Sybase, Oracle, HSQLDB, Ingres, Microsoft SQL Server, and any other database accessible through an ODBC Bridge. If you want to import from a database directly or in parallel (leaving the client out of the route), consider a tool such as Sqoop.
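The "minimal memory footprint" idea amounts to streaming the result set in batches instead of loading it whole. The sketch below shows that pattern with Python's built-in `sqlite3` module as a stand-in database and an in-memory text buffer as a stand-in for the HDFS writer; SQLite is not in Radoop's list above, and the function names are hypothetical.

```python
import io
import sqlite3
from typing import Iterator, List, TextIO, Tuple


def stream_query(conn: sqlite3.Connection, query: str, batch_size: int) -> Iterator[List[Tuple]]:
    """Yield the query result in batches so that only one batch is held in memory."""
    cursor = conn.execute(query)
    while True:
        batch = cursor.fetchmany(batch_size)
        if not batch:
            return
        yield batch


def copy_to_sink(conn: sqlite3.Connection, query: str, sink: TextIO, batch_size: int = 1000) -> int:
    """Write every row to `sink` (a stand-in for the HDFS writer); return the row count."""
    total = 0
    for batch in stream_query(conn, query, batch_size):
        sink.writelines(",".join(map(str, row)) + "\n" for row in batch)
        total += len(batch)
    return total


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (id INTEGER, amount REAL)")
conn.executemany("INSERT INTO sales VALUES (?, ?)", [(i, i * 1.5) for i in range(2500)])
sink = io.StringIO()
print(copy_to_sink(conn, "SELECT id, amount FROM sales ORDER BY id", sink))  # 2500
```

With `batch_size=1000`, the 2500 rows pass through in three batches; peak memory depends on the batch size, not on the table size.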

Write subgroup

The Write operators (Write CSV and Write Database) write the data referenced by the HadoopExampleSet on their input either to a flat file on the client or, through the specified database connection, into a database. The Store operator can be used to write the data to the HDFS or to S3.

This guide contains more detailed information on data import operators in a later section.

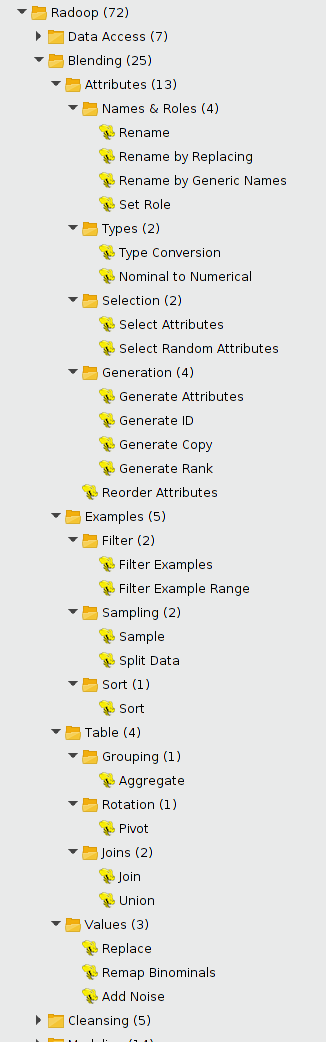

Blending group

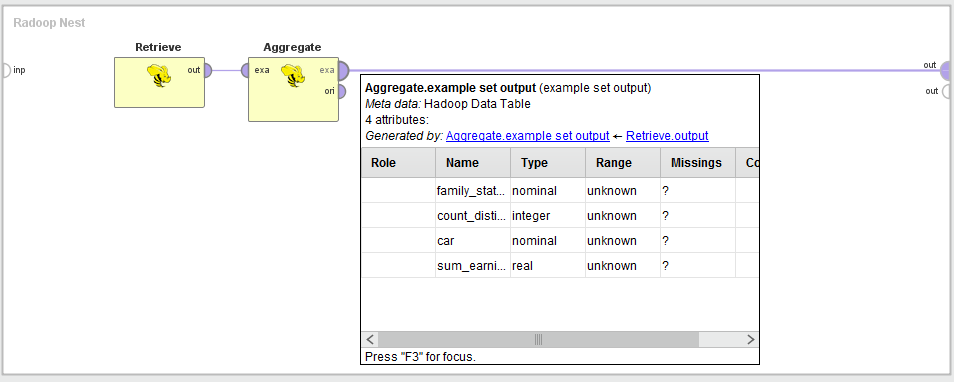

This is the largest group of Radoop operators; it contains all blending operators organized into subgroups. The operators usually take a HadoopExampleSet as input, and have two output ports — one for the transformed HadoopExampleSet and one for the original. While designing, hover over the first output port to examine the structure or metadata after the transformation.

The following subgroups and operators are part of the Blending group:

Two standard transformation operators can take multiple inputs. To combine multiple data sets (HadoopExampleSet objects), use the Join or Union operators from the Set Operations group. Join implements the four types of join operations common in relational databases (inner, left, right, and full outer) and has two input ports and one output port. Union takes an arbitrary number of input data sets that have the same structure. The output data set is the union of these input data sets (duplicates are not removed).
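To make the four join types and the duplicate-keeping union concrete, here is a small in-memory sketch over lists of dictionaries. It only illustrates the semantics; on the cluster these operations run as distributed Hive queries, and the helper names below are hypothetical.

```python
from typing import Dict, List

Row = Dict[str, object]


def join(left: List[Row], right: List[Row], key: str, how: str = "inner") -> List[Row]:
    """Join two data sets on `key`; `how` is 'inner', 'left', 'right', or 'full'."""
    left_cols = {c for r in left for c in r}
    right_cols = {c for r in right for c in r}
    result: List[Row] = []
    matched_right = set()
    for l_row in left:
        matches = [i for i, r_row in enumerate(right) if r_row[key] == l_row[key]]
        for i in matches:
            matched_right.add(i)
            result.append({**l_row, **right[i]})
        if not matches and how in ("left", "full"):
            # Unmatched left row: pad the right-side columns with None (SQL NULL).
            result.append({**{c: None for c in right_cols}, **l_row})
    if how in ("right", "full"):
        for i, r_row in enumerate(right):
            if i not in matched_right:
                # Unmatched right row: pad the left-side columns with None.
                result.append({**{c: None for c in left_cols}, **r_row})
    return result


def union(*datasets: List[Row]) -> List[Row]:
    """Concatenate data sets with the same structure; duplicates are kept."""
    return [row for data in datasets for row in data]


customers = [{"id": 1, "name": "Ann"}, {"id": 2, "name": "Bob"}]
orders = [{"id": 1, "total": 10}, {"id": 3, "total": 5}]
# Prints: inner 1, left 2, right 2, full 3
for how in ("inner", "left", "right", "full"):
    print(how, len(join(customers, orders, "id", how)))
print(len(union(customers, customers)))  # 4: the duplicates remain
```

The nested-loop matching keeps the example short; it says nothing about how Hive plans the join.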

Custom transformations can be implemented using one of the scripting operators in Radoop. They can be found in the Utility/Scripting group.

Cleansing group

This group contains operators that perform data cleansing. As in the Blending group, these operators receive a HadoopExampleSet as input and have outputs for the transformed and the original HadoopExampleSet. You can handle missing values in the input data set with the Replace Missing Values or Declare Missing Values operator, and remove duplicate examples with the Remove Duplicates operator.

Normalize and Principal Component Analysis have a preprocessing model output, which can be used to perform the same preprocessing step on another data set with the same schema. To do this, use the Apply Model operator in the Scoring group.
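The following in-memory sketch shows the effect of two cleansing steps on a tiny data set. It assumes mean replacement as the missing-value strategy and treats rows as duplicates only when all values match; Radoop's operators expose their own parameters for these choices, and the helper names are hypothetical.

```python
from statistics import mean
from typing import Dict, List, Optional

Row = Dict[str, Optional[float]]


def replace_missing_with_mean(rows: List[Row], column: str) -> List[Row]:
    """Replace None in `column` with the mean of the non-missing values (assumed strategy)."""
    present = [r[column] for r in rows if r[column] is not None]
    fill = mean(present)
    return [{**r, column: fill if r[column] is None else r[column]} for r in rows]


def remove_duplicates(rows: List[Row]) -> List[Row]:
    """Keep the first occurrence of every row whose values are all identical."""
    seen = set()
    unique = []
    for r in rows:
        key = tuple(sorted(r.items()))
        if key not in seen:
            seen.add(key)
            unique.append(r)
    return unique


print(replace_missing_with_mean([{"a": 1.0}, {"a": None}, {"a": 3.0}], "a"))
# [{'a': 1.0}, {'a': 2.0}, {'a': 3.0}]
print(len(remove_duplicates([{"a": 1.0}, {"a": 1.0}, {"a": 2.0}])))  # 2
```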
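A preprocessing model is essentially the statistics learned from one data set, stored so they can be reapplied unchanged to another. The sketch below assumes z-transformation (subtract the mean, divide by the standard deviation) as the normalization method; the class and function names are hypothetical and do not mirror Radoop's model objects.

```python
from dataclasses import dataclass
from statistics import mean, pstdev
from typing import Dict, List

Row = Dict[str, float]


@dataclass
class ZScoreModel:
    """A 'preprocessing model': the statistics learned from the training data."""
    means: Dict[str, float]
    stdevs: Dict[str, float]

    def apply(self, rows: List[Row]) -> List[Row]:
        """Transform new rows with the stored statistics (columns not in the model pass through)."""
        return [
            {c: (v - self.means[c]) / self.stdevs[c] if c in self.means else v for c, v in r.items()}
            for r in rows
        ]


def fit_normalize(rows: List[Row], columns: List[str]) -> ZScoreModel:
    means = {c: mean(r[c] for r in rows) for c in columns}
    # A constant column would have stdev 0; fall back to 1.0 to avoid division by zero.
    stdevs = {c: pstdev([r[c] for r in rows]) or 1.0 for c in columns}
    return ZScoreModel(means, stdevs)


model = fit_normalize([{"x": 1.0}, {"x": 3.0}], ["x"])
print(model.apply([{"x": 5.0}]))  # [{'x': 3.0}]
```

The key design point is that `apply` reuses the training mean and deviation instead of recomputing them on the new data, which is what makes the two data sets comparable.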

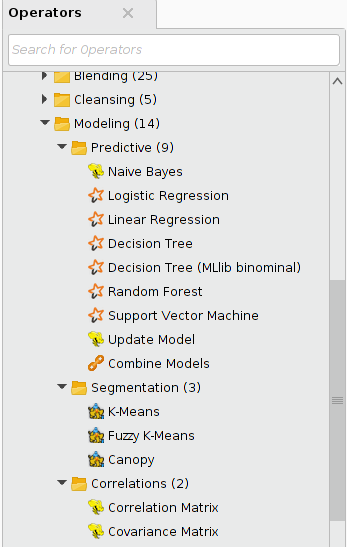

Modeling group

Besides ETL operators, Radoop also contains operators for predictive analytics. All the modeling algorithms in this group use either the MapReduce paradigm or Spark to take full advantage of the parallelism of a distributed system, so they scale with the large volume of data on your cluster. This section only lists the operators; see the section on Radoop's predictive analytics features for further explanation.

The operators in this group work with the same type of model objects as core RapidMiner operators do. Thus, the model output of a Radoop operator can be connected to the input port of a core RapidMiner operator and vice versa. Models trained on the cluster can be visualized in the same way as models trained on a data set in operative memory. The same compatibility applies to performance vector objects, which hold the performance criteria values calculated to review and compare model performance. These objects can also be easily shared between operators that work on the cluster and those that use operative memory.

The following operators are part of the Modeling group:

Predictive subgroup

Most operators in this group implement distributed machine learning algorithms for classification or regression. Their prediction model is built on the input HadoopExampleSet on the cluster. The model can be applied both to data on the cluster (see the Scoring operator group) and to data in operative memory by the Apply Model core RapidMiner operator.

Update Model implements incremental learning (for Naive Bayes models only). Incremental learning means that an existing model is modified (its attributes are updated) based on new data and new observations. As a result, the machine learning algorithm does not have to build a new model on the whole data set; it updates the model by training on the new records. The operator expects as inputs the previously built model and a data set that has the same structure as the one the model was built on.

The Combine Models operator combines the trained prediction models on its input ports into a simple voting model: a Bagging Model.
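Naive Bayes lends itself to incremental learning because, for nominal attributes, the model can be reduced to counts, and counts from new records can simply be added. The sketch below assumes such a count-based categorical Naive Bayes with simple Laplace-style smoothing; it is a hypothetical illustration, not Radoop's model representation.

```python
from collections import Counter
from typing import Dict, Hashable, Iterable, Tuple

Example = Tuple[Dict[str, Hashable], Hashable]  # (attribute values, label)


class CountingNaiveBayes:
    """Categorical Naive Bayes that stores only counts, so new data can be added later."""

    def __init__(self) -> None:
        self.class_counts: Counter = Counter()
        self.value_counts: Dict[Tuple[str, Hashable], Counter] = {}

    def update(self, examples: Iterable[Example]) -> "CountingNaiveBayes":
        """Add the new examples to the counts without revisiting earlier data."""
        for attributes, label in examples:
            self.class_counts[label] += 1
            for name, value in attributes.items():
                self.value_counts.setdefault((name, label), Counter())[value] += 1
        return self

    def predict(self, attributes: Dict[str, Hashable]) -> Hashable:
        total = sum(self.class_counts.values())
        best_label, best_score = None, -1.0
        for label, count in self.class_counts.items():
            score = count / total
            for name, value in attributes.items():
                seen = self.value_counts.get((name, label), Counter())
                # Laplace-style smoothing (one extra slot for unseen values).
                score *= (seen[value] + 1) / (count + len(seen) + 1)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


model = CountingNaiveBayes().update([({"outlook": "sunny"}, "no")] * 2 + [({"outlook": "rain"}, "yes")])
print(model.predict({"outlook": "sunny"}))  # no
model.update([({"outlook": "sunny"}, "yes")] * 3)  # incremental step: only the new records
print(model.predict({"outlook": "sunny"}))  # yes
```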
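A voting model predicts the label that most of its member models agree on. In this sketch, models are plain Python callables, which is an assumption made for illustration only; Radoop combines its own model objects.

```python
from collections import Counter
from typing import Callable, Dict, List

Model = Callable[[Dict[str, object]], str]


def combine_models(models: List[Model]) -> Model:
    """Return a model that predicts the label most of the input models agree on."""
    def vote(example: Dict[str, object]) -> str:
        predictions = [model(example) for model in models]
        # On a tie, most_common keeps first-seen order, so the earliest model's label wins.
        return Counter(predictions).most_common(1)[0][0]
    return vote


ensemble = combine_models([
    lambda e: "yes" if e["x"] > 0 else "no",
    lambda e: "yes",
    lambda e: "no",
])
print(ensemble({"x": 1}), ensemble({"x": -1}))  # yes no
```

How Radoop's Bagging Model breaks ties is not stated in this section; the first-seen rule above is only this sketch's choice.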

Segmentation subgroup

The Segmentation group contains three different clustering operators. Each of them expects a HadoopExampleSet object as input and delivers the modified HadoopExampleSet as output. The algorithms add a new column that contains the result of the clustering: the clusters, represented by nominal identifiers (cluster_0, cluster_1, cluster_2, etc.). Rows with the same value belong to the same cluster.

The input data must have an ID attribute that uniquely identifies the rows. The attribute must have the "ID" role (use the Set Role operator). If the data set has no ID column, you can use Radoop's Generate ID operator to create one.
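The sketch below shows why an ID is useful: the clustering result is keyed by ID, so each nominal cluster label can be attached back to the correct row. It uses a plain k-means loop with a deterministic initialization purely for illustration. This section does not say which three algorithms Radoop provides, and the function name is hypothetical.

```python
import math
from typing import Dict, List, Sequence


def kmeans_clusters(points: Dict[str, Sequence[float]], k: int, iterations: int = 20) -> Dict[str, str]:
    """Return {ID: 'cluster_N'}; the ID is what ties each result back to its row."""
    ids = sorted(points)
    # Deterministic initialization: the first k points in ID order.
    centroids: List[List[float]] = [list(points[i]) for i in ids[:k]]
    assignment: Dict[str, int] = {}
    for _ in range(iterations):
        for i in ids:
            assignment[i] = min(range(k), key=lambda c: math.dist(points[i], centroids[c]))
        for c in range(k):
            members = [points[i] for i in ids if assignment[i] == c]
            if members:  # keep the old centroid if a cluster becomes empty
                centroids[c] = [sum(axis) / len(members) for axis in zip(*members)]
    return {i: f"cluster_{assignment[i]}" for i in ids}


print(kmeans_clusters({"a": (0, 0), "b": (0, 1), "c": (10, 10), "d": (10, 11)}, k=2))
# {'a': 'cluster_0', 'b': 'cluster_0', 'c': 'cluster_1', 'd': 'cluster_1'}
```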

Correlations subgroup

The Correlation Matrix and Covariance Matrix operators calculate the correlation and covariance between attributes in the input data set. Correlation shows how strongly pairs of attributes are related. Covariance measures the degree to which two attributes change together.
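For one pair of attributes, the two quantities can be computed as shown below. Each entry of the operators' matrices corresponds to one such pair. The sketch uses the sample covariance (dividing by n - 1) and Pearson correlation. Whether Radoop uses the sample or the population formula is not stated here, so that choice is an assumption.

```python
import math
from typing import Sequence


def covariance(x: Sequence[float], y: Sequence[float]) -> float:
    """Sample covariance: how much x and y vary together (n - 1 denominator)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    return sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / (n - 1)


def correlation(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation: covariance scaled into the range [-1, 1]."""
    return covariance(x, y) / math.sqrt(covariance(x, x) * covariance(y, y))


x = [1.0, 2.0, 3.0, 4.0]
print(covariance(x, [2.0, 4.0, 6.0, 8.0]))  # about 3.33
print(correlation(x, [8.0, 6.0, 4.0, 2.0]))  # approximately -1.0
```

Correlation is covariance divided by both standard deviations, which is why it stays between -1 and 1 regardless of the attributes' units.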

Scoring group

The Scoring group contains the Apply Model operator. It applies a model to the data on its input port and supports all RapidMiner prediction models. The model can be trained either by a core RapidMiner modeling operator on an ExampleSet in memory or by a Radoop operator on the Hadoop cluster. The operator can apply both prediction and clustering models.

Validation group

The following operators are part of the Validation group:

The Performance (Binominal Classification), Performance (Classification), and Performance (Regression) operators each require as input a HadoopExampleSet object that has both label and predicted label attributes. Each operator compares these attributes and calculates performance. You can select performance criteria using the operator's parameters. The generated performance vector object that contains these values is fully compatible with the I/O objects used by core RapidMiner operators.

The Split Validation operator randomly splits an ExampleSet into a training set and a test set: a model is trained on the training set, applied to the test set, and then evaluated with one of the performance operators.
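For a two-class (binominal) label, the comparison of label and predicted label reduces to counting true and false positives and negatives. The sketch computes three common criteria from those counts. The function name is hypothetical, and the criteria the operator offers are set through its parameters.

```python
from typing import Dict, Hashable, Sequence


def binominal_performance(labels: Sequence[Hashable], predictions: Sequence[Hashable],
                          positive: Hashable) -> Dict[str, float]:
    """Compare label and predicted label and compute a few common criteria."""
    pairs = list(zip(labels, predictions))
    tp = sum(1 for lab, pred in pairs if lab == positive and pred == positive)
    fp = sum(1 for lab, pred in pairs if lab != positive and pred == positive)
    fn = sum(1 for lab, pred in pairs if lab == positive and pred != positive)
    tn = len(pairs) - tp - fp - fn
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


print(binominal_performance(["yes", "yes", "no", "no"], ["yes", "no", "no", "no"], positive="yes"))
# {'accuracy': 0.75, 'precision': 1.0, 'recall': 0.5}
```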
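The random split can be sketched as a seeded shuffle followed by a cut at the training ratio. A fixed seed makes the split reproducible between runs. The 0.7 ratio, the seed value, and the function name are assumptions made for illustration.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_validation(rows: Sequence[T], train_ratio: float = 0.7,
                     seed: int = 1992) -> Tuple[List[T], List[T]]:
    """Shuffle with a fixed seed, then cut into a training part and a test part."""
    rng = random.Random(seed)
    shuffled = list(rows)
    rng.shuffle(shuffled)
    cut = round(len(shuffled) * train_ratio)
    return shuffled[:cut], shuffled[cut:]


train, test = split_validation(list(range(10)))
print(len(train), len(test))  # 7 3
```

A model would then be trained on `train`, applied to `test`, and scored by comparing the test labels with the predictions, as in the operator's validation flow.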

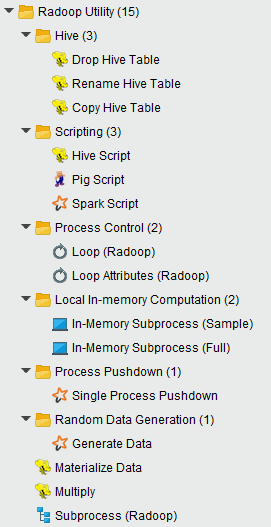

Utility group

This group contains many powerful operators, some of which require experience with RapidMiner Radoop. The following operators are in the Utility group:

The Subprocess operator lets you run a process inside a process, which greatly helps in designing clear and modular processes. The Multiply operator is required if you want to use the same HadoopExampleSet object in different branches of a process. The operator simply delivers the object on its input port to every connected output port.

The Materialize Data operator performs all deferred calculations on the input data set and writes the data to the distributed file system (into a temporary table). The Multiply operator simply multiplies the selected input objects.
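"Deferred calculations" means that operators on a HadoopExampleSet record what should happen instead of computing it immediately. The sketch imitates that with a small class that queues transformations and runs them only when materialized. This is a conceptual illustration under that assumption. Radoop itself builds Hive or Spark jobs, and `LazyTable` is a hypothetical name.

```python
from typing import Any, Callable, Iterable, List, Tuple


class LazyTable:
    """A reference to data plus a list of pending steps, in the spirit of a HadoopExampleSet."""

    def __init__(self, source: Iterable[Any], steps: Tuple[Callable[[Any], Any], ...] = ()):
        self.source = source
        self.steps = steps

    def map(self, fn: Callable[[Any], Any]) -> "LazyTable":
        """Record a transformation without running it."""
        return LazyTable(self.source, self.steps + (fn,))

    def materialize(self) -> "LazyTable":
        """Run all deferred steps once and return a table with no pending work."""
        rows: List[Any] = list(self.source)
        for fn in self.steps:
            rows = [fn(row) for row in rows]
        return LazyTable(rows)


calls = []


def double(x):
    calls.append(x)
    return x * 2


plan = LazyTable([1, 2, 3]).map(double).map(lambda x: x + 1)
print(len(calls))                 # 0: nothing has run yet
stored = plan.materialize()
print(stored.source, len(calls))  # [3, 5, 7] 3
```

Materializing once is useful when several branches read the same result: each branch then starts from stored data instead of re-running the whole chain.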

Hive subgroup

The three table management operators in this group perform typical management operations on Hive tables. For example, you can use Drop before an Append operator in a loop to make sure that each new process run starts with a new table. Both Rename and Copy can help in more complex processes (for example, processes that include loops), or they can manage tables of other processes that run before or after them. The tables they manipulate can be in any Hive database that the user has access to.

Scripting subgroup

To perform custom transformations on your data, you can use any of the three most popular scripting languages on top of Hadoop: Hive, Pig, and Spark. The Hive Script, Pig Script, and Spark Script operators in the Scripting group let you write your own code. All three can take multiple input data sets, and Pig Script and Spark Script can also deliver multiple output data sets. The Hive Script and Pig Script operators determine the output metadata at design time and also check the scripts for syntax errors. The Spark Script operator does not handle metadata on its output and does not check for syntax errors. Syntax highlighting is available for Spark scripts written in Python.

Process Control subgroup

The Process Control subgroup contains operators related to organizing the steps of the process. In the Loop operator subgroup, Loop Attributes iterates through the columns of a data set and performs an arbitrary Radoop subprocess in each iteration. The general Loop operator performs its inner subprocess an arbitrary number of times.

Local In-Memory Computation subgroup

The Local In-Memory Computation subgroup contains operators that help you combine processing on the cluster with processing in memory. In-Memory Subprocess (Sample) fetches a sample of a data set from the cluster into memory and performs its inner subprocess on the sample there. This subprocess can use any of the hundreds of core RapidMiner operators. In-Memory Subprocess (Full) is similar, but it reads and processes the whole data set by performing iterations similar to the Sample version. That is, it partitions the input data set into n chunks, then runs its in-memory subprocess on each of these chunks in a loop with n iterations. It can append the resulting data sets of these subprocesses to create an output data set on the cluster. See the section on Advanced Process Design for more detail.
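The partition, loop, and append pattern of the Full variant can be sketched locally as follows. The sketch assumes contiguous, nearly equal-sized chunks. How Radoop actually assigns rows to chunks is not described here, and the function names are hypothetical.

```python
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")
U = TypeVar("U")


def partition(rows: Sequence[T], n: int) -> List[List[T]]:
    """Split rows into n contiguous chunks whose sizes differ by at most one."""
    size, extra = divmod(len(rows), n)
    chunks, start = [], 0
    for i in range(n):
        end = start + size + (1 if i < extra else 0)
        chunks.append(list(rows[start:end]))
        start = end
    return chunks


def process_in_chunks(rows: Sequence[T], n: int, subprocess: Callable[[List[T]], List[U]]) -> List[U]:
    """Run `subprocess` on one chunk at a time (n iterations) and append the results."""
    output: List[U] = []
    for chunk in partition(rows, n):
        output.extend(subprocess(chunk))
    return output


print([len(c) for c in partition(list(range(10)), 3)])  # [4, 3, 3]
print(process_in_chunks(list(range(10)), 3, lambda chunk: [x * x for x in chunk])[:4])  # [0, 1, 4, 9]
```

Only one chunk needs to fit in memory at a time, which is the point of choosing n large enough for the data volume.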

Process Pushdown subgroup

This subgroup contains one of the most powerful operators in RapidMiner Radoop, the Single Process Pushdown. This meta-operator can contain any operator from RapidMiner Community Edition and from most extensions (e.g., Text Processing, Weka). The subprocess inside the Single Process Pushdown is pushed to one of the cluster nodes and executed using that node's memory. The result is available on the output as a HadoopExampleSet (first output port) or as an in-memory IOObject, such as a model or a performance vector (other output ports). More information and further hints on this operator can be found on the Advanced Process Design page.

Random Data Generation subgroup

If you need big data in Hive for testing purposes, use the Generate Data operator in this group. It can generate huge amounts of numerical data, using the selected target function to calculate the label value.
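Generating test data this way means drawing random numeric attributes and deriving the label from them with a target function. The sketch uses uniform attributes and Python's `sum` as a stand-in target function. The value range, the seed, and the function name are assumptions, and the sketch does not name the target functions Radoop actually offers.

```python
import random
from typing import Callable, List, Sequence


def generate_data(n_examples: int, n_attributes: int, target: Callable[[Sequence[float]], float],
                  seed: int = 0, low: float = -10.0, high: float = 10.0) -> List[List[float]]:
    """Draw uniform random attributes and append target(attributes) as the label."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n_examples):
        attributes = [rng.uniform(low, high) for _ in range(n_attributes)]
        rows.append(attributes + [target(attributes)])
    return rows


data = generate_data(5, 3, target=sum)
print(len(data), len(data[0]))  # 5 4
```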